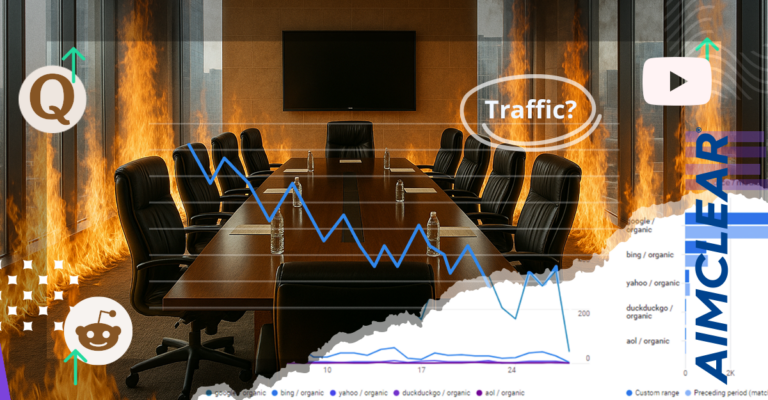

One thing you can guarantee in the SEO world: when Google Search introduces a major change, opinions will multiply like flies at a Fourth of July picnic.

So it was when Google announced BERT, its new “neural network-based technique for natural language processing (NLP),” in an October 25, 2019, blog post. When SEOs heard that it would affect one in ten searches on Google, that was all they needed to start speculating about how it works and how to optimize for it.

If you’ve somehow missed what BERT is about, the links below will help you get up to speed. But here’s a tl;dr definition (spoiler alert?): BERT (Bidirectional Encoder Representations from Transformers) is a machine learning model that learns to recognize the relationships between words in a sentence. Applied to search, it helps interpret queries whose meaning depends on word relationships, such as those signaled by small words like “for” and “to,” which search engines previously tended to ignore.

Now that the dust has settled on BERT, we’ve rounded up some of the best takes on it from the SEO community so you can learn what BERT actually is, what it does, and what (if anything) you should do in response to it.

Google on Google BERT

Understanding searches better than ever before – The Keyword Blog, by Google. This is the post that launched a thousand Sesame Street memes. Google’s official announcement that BERT is now being used to process search queries.

Open-sourcing BERT: State-of-the-Art Pre-Training for Natural Language Processing – The AI Blog, by Google. November 2018 announcement of the BERT open source project.

Will BERT affect my rankings or cause my site to lose visibility? Google Webmaster Central Hangout, YouTube. The link jumps to the segment of the video where a Google representative answers this question.

“Nothing to optimize for with BERT,” Danny Sullivan, Twitter. In this Twitter thread, Google’s Search Liaison explains why the only thing sites need to do for BERT is what Google has always said they should do: “write content for users.”

Journalistic Coverage of Google BERT

Welcome BERT: Google’s latest search algorithm to better understand natural language, by Barry Schwartz, Search Engine Land. Covers what was in the Google announcement, but adds more details about how BERT affects Featured Snippets.

Google BERT Update–What It Means, by Roger Montti, Search Engine Journal. Roger summarizes the takes of SEO experts Dawn Anderson and Bill Slawski. See below for more of their writing about BERT.

Google BERT Misinformation Challenged, by Roger Montti. Roger asks several SEO experts about various myths circulating about BERT.

Google is improving 10 percent of searches by understanding language context, by Dieter Bohn, The Verge. “When it comes to the quality of its search results, Nayak [of Google] says that ‘this is the single biggest … most positive change we’ve had in the last five years and perhaps one of the biggest since the beginning.’”

Google brings in BERT to improve its search results, by Frederic Lardinois, TechCrunch.

Meet BERT, Google’s Latest Neural Algorithm For Natural-Language Processing, by Laurie Sullivan, MediaPost.

Bing is Now Utilizing BERT at a Larger Scale Than Google, by Matt Southern, Search Engine Journal. Surprise! It turns out Bing started using BERT for search results months before Google and implemented it worldwide. At the time of its announcement, Google was applying BERT only to English-language results.

Expert Analysis of Google BERT

Google BERT and Family and the Natural Language Understanding Leaderboard Race, by Dawn Anderson, Slideshare. Many well-known SEOs point to this slide deck from Dawn’s Pubcon Pro 2019 presentation as the best and most comprehensive explanation of BERT and how it works, as well as a thorough overview of Natural Language Processing as a whole.

A deep dive into BERT: How BERT launched a rocket into natural language understanding, by Dawn Anderson, Search Engine Land. A text version of Dawn’s in-depth SEO analysis of BERT. Also see this summary of a webinar on BERT that Dawn did for Search Engine Journal: BERT Explained: What You Need to Know About Google’s New Algorithm.

Context Clouds, Co-occurrence, Relatedness and BERT, by Dawn Anderson, #SEOisAEO podcast. In this May 2019 podcast episode hosted by Jason Barnard, Dawn reviews several academic papers that were the basis for the BERT project.

The Top 7 Things You Must Know About Google’s BERT Update, by Liam Carnahan, Content Marketing Institute. There are way too many articles out there speculating wildly about how to optimize your content for BERT. This post offers recommendations actually based on what BERT does (and does not do).

[BERT] Pretrained Deep Bidirectional Transformers for Language Understanding (algorithm) | TDLS, by Danny Luo, Toronto Deep Learning Series on YouTube. Video exploration of BERT recommended by Dawn Anderson.

Paper Dissected: “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” Explained, by Keita Kurita, Machine Learning Explained. A plain-English distillation of the main academic paper behind BERT.

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, by Minh Quang-Nhat Pham, Slideshare. Early slide deck overview of BERT from an artificial intelligence researcher.

Demystifying BERT: The Groundbreaking NLP Framework, by Mohd Sanad Zaki Rizvi, Medium. A good explanation of the Transformer, the neural network architecture BERT is built on.

Bidirectional Transformers for Language, Google Scholar. A listing of academic papers about BERT.

Related Resources

Some opportunities to learn more about Natural Language Processing, the foundation underneath BERT:

Better Content Through NLP (Natural Language Processing), by Ruth Burr Reedy, Moz Whiteboard Friday. In this video (with transcription), Ruth explains how Google uses NLP to better understand web content and return better search results.

Natural Language Understanding (IBM) and Natural Language (Google), two free API resources for testing and using NLP on your own.

Smaller, faster, cheaper, lighter: Introducing DistilBERT, a distilled version of BERT, by Victor Sanh, Medium. Because BERT is open source, anyone can use it, not just Google. This post introduces DistilBERT, a smaller, faster version of BERT that is less resource-intensive to run for your own NLP projects.

While there may be no way to optimize for BERT, the experts cited above all agree that understanding the strides search engines are making toward true natural language understanding is essential for SEO in 2020 and beyond. Summed up, their most common recommendations for the Natural Language Processing age are:

- Create content that is comprehensive and useful for your audience, rather than traditional “SEO writing” (such as worrying about how many keywords are on the page).

- Use semantically rich terms and semantic markup to help search engines better understand what your site is about.

Do you have any BERT or NLP resources you’d add to our list? Have some thoughts on how SEOs should respond to BERT? Let us know in a comment or on social media.