Before starting, we’re not in any way minimizing the importance of: content quality, the human element, shift in search behavior, and/or sufficient attention to site performance. They’re all crucial elements. We guarantee an overemphasis on technical jargon in this post. Our focus is purposeful and our topic designed to be narrow, focused on vector embeddings.

A couple of years ago, we dropped a blog post loaded with tactical substance. Soon after, an industry contact messaged with a question so off-base it felt like they’d skimmed a headline and guessed the rest. Before I could respond, they offered, “I didn’t read it, ran it through ChatGPT to get a summary.”

That moment landed. ChatGPT missed the key insights. More clearly stated: we handed the post as copy and expected structured intel. No prompt tuning. No embedded vectors. No architecture built for signal amplification. The machine returned surface-level noise because we fed it noise. While we suggest more sophisticated methods, if you do nothing else after reading this post, run your content through ChatGPT, Claude, Gemini, Llama, DeepSeek, and other models and tune your offering until models return the main points. Crude, but still better than you readers fully missing the point when they TL;DR it with an LLM.

You Will Be Assimilated. Resistance is Futile

Top-tier LLM and AI search professionals increasingly agree that modern SEO resembles data engineering more than classic keyword optimization. When paired with brand propagation, storytelling, and omnipresent pre-search visibility, especially creative that leaves a lasting mark, technical fluency with vectors and embeddings become essential. We’re constantly handling inquiries from SEOs wondering WTF to do. Some background is required to supply a cogent answer.

This article explores data, which may be behind AI search visibility in practical terms, for SEOs asking the uncomfortable but necessary question: “What changed, and where the fark did my cheese get moved to.

Ignore Vectors, Risk AI Invisibility

SEOs have been name-dropping, “Vectors” for years, mostly in conversations about semantic search and entity signals. The majority of SEO, AEO, AIO teams know vectors matter but seemingly can’t say why. Straight up: In 2025, most LLMs and AI-driven systems approximate meaning through vector representations, especially in generative summarization, similarity search, and re-ranking. Nail vectors, and you’re speaking an AI model’s language, potentially steering results. A vector is a fingerprint of the text, but instead of swirls and ridges, it’s direction and distance in high-dimensional space. Words or sentences that mean similar things get mapped close together. Different models generate these fingerprints differently.

Here’s a distinction that comes up in technical circles: people often use the terms “embedding” and “vector” interchangeably, but in precise terms, an embedding is the result of passing input (like text, images, or audio) through a model to get a numerical representation of its meaning. That output is a vector—a list of numbers in high-dimensional space. Embeddings are those vectors, shaped by the model’s architecture and training. So, while some describe “embedding” as the meaning and “vector” as the math, in most SEO and ML applications, they refer to the same thing: a vector that encodes meaning in a way machines can use.

In marketing terms, vectors are what let AI recognize patterns like “angry customer,” “price complaint,” or “Q4 holiday gift guide.” Vectors drive targeting, recommendation engines, personalization, and classification pipelines. They also get stored in a vector database, either your own or one managed by a platform. If you’re running your own stack using tools like FAISS, Pinecone, Weaviate, or Qdrant, the vectors live in your infrastructure. If you’re using hosted tools like Algolia, OpenAI’s embedding API without custom storage, Shopify’s semantic engine, or Azure AI Search, the vectors are stored in their systems, not yours.

AI systems like ChatGPT, Claude, and models such as Gemini encode content into embeddings, mathematical representations of meaning used for tasks like summarization, clustering, and retrieval. While traditional search engines like Google use semantic understanding and machine-learned signals to interpret content, the exact role of persistent embeddings in ranking remains less transparent. However, as Google evolves its AI Overviews and integrates Gemini more deeply, it’s reasonable to assume that embeddings play a growing role in how meaning is extracted and surfaced from content across the web, including your site.

Embeddings help machines approximate your content’s meaning. They inform how relevance is determined for summarization, clustering, or contextual answers. When your site is surfaced in AI chat or overviews, it’s often because that meaning has been captured as an embedding. But here’s the catch: that interpreted meaning may differ from your intended message. If you’re not influencing the way content is structured and signaled, embeddings may reflect third-party context, outdated assumptions, or misaligned signals. Let’s explore how to monitor those representations, influence the embedding process indirectly, and improve alignment with how LLMs interpret your site.

We don’t create embeddings directly. We create content that gets interpreted into embeddings by the systems that read it. So, while “embeddings” are the outcome, what we control is the input: well-structured site content, semantic metadata, internal relationships, and topical clarity. From that, LLMs and AI search systems generate embeddings that contribute to how your site is ranked, cited, summarized, or omitted. Put simply: You don’t embed. You build meaning. AI turns that meaning into math and uses it to predict what matters in a given context. That’s why controlling what goes in—structure, clarity, and intent—is the only way to influence what comes out.

For some marketers, especially those using AI-enhanced tools, evidence of how site content is being perceived and parsed as embeddings may already be baked in, just waiting to be harvested.

Are Embeddings Happening in Your Stack?

Many modern systems that handle visible or metadata content, like site search, chatbots, clustering, or auto-tagging, use some form of embeddings. Find out which systems are producing them, if any, and what content they’re touching. Ask your dev or product team, “Are we using AI in our site search?” If the answer is yes, ask what model it runs on. Apply the same questions to chatbots, classification tools, or internal tagging systems.

Then study what each system is embedding. Is it blog content? Support articles? Product attributes? That tells you where semantic processing is happening and where to begin managing for LLM visibility.

Even if you’re not directly shaping embeddings through AI-aware tools, your content is still likely being processed by LLMs. It’s just not on your terms. In those cases, the interpretation of your site may rely on third-party summaries, outdated copies, or loosely inferred context. That’s where brand dilution happens. Your brand isn’t invisible, but it isn’t clearly represented either. To reduce this risk, it’s worth influencing the structure, clarity, and signals of your content so that when LLMs embed and retrieve it, the version of your brand they surface is the one you intended.

Who Owns the Embeddings?

Start by evaluating what’s being embedded in your orginization, specifically, what LLMs and AI search systems are processing. Once you know where embeddings are, you need to know who can touch them. Who set it up? Who can export them? Can the model be changed? Without access, you can’t translate outputs, tune the system, or align the content with how models interpret it. Ask: “Can this tool export vectors?” If not, ask if the team can swap models or change how they’re generated. If yes, you’re one step closer to managing visibility. For each tool, write down who controls it. Get contact info. Create a short doc with tool name, owner, model, and export capability. That’s your starting point for future translation.

Once you’re using tools that generate or rely on embeddings, the next step is optimization within those systems. Good news! This is where your SEO expertise kicks in. Once you’ve identified where embeddings are generated and what content they represent, you treat those systems as a new optimization layer. Here’s the loop:

- Analyze the embeddings.

Use your tools, if supported, or export vectors to examine what content is being embedded, how it clusters, and whether it aligns with your topical intent. You’re looking for gaps, noise, or misalignment. - Make site-level changes.

Rewrite vague content. Tighten clusters. Add clearer relationships between pages. Adjust tagging and metadata that influence semantic models. - Regenerate embeddings.

If your tools allow, re-embed the updated content. If not, wait for the next crawl or refresh cycle. - Re-test visibility.

Use AI tools that simulate LLM output (e.g., GPT, Claude, or search-oriented QA tools) to see if your content is being represented more accurately. Look at summaries, citations, or structured answers. - Repeat as needed.

Embeddings reflect your content and can amplify its influence across AI contexts. Treat them as living infrastructure. Tune, test, tune again.

To analyze how your website content is embedded and interpreted by AI search engines and LLMs, and to optimize for better AI visibility, consider the following tools and workflows aligned with the guidance in this post.

Market Brew AI Overviews Visualizer

- Input your website URL and see how AI search engines, like Google’s AI Overviews, may generate and cluster embeddings from your content.

- Shows how your pages group into semantic embedding clusters.

- Indicates whether your content aligns with top-performing thematic clusters.

- Provides a model-driven realignment strategy with actionable content updates if your content is semantically distant from key clusters.

- Test revised content to assess semantic alignment improvements.

- Offers a visual model of how AI systems might interpret your site in vector space, which may make it possible to optimize for AI-driven rankings and summaries.

Embedding-Aware Site Search and Chatbot Tools

- Examples: Algolia with semantic search, OpenAI-powered tagging or classification systems, and embedding-based chatbots.

- These tools process your site’s content using embedding models similar to those used by LLMs and AI search engines, making your content more accessible and semantically interpretable by those systems.

- By using tools that encode your content into familiar mathematical formats, you increase your chances of being accurately summarized, cited, or surfaced in AI-driven outputs.

- Azure AI Search (Vector Search)

- Index your website’s content, generate embeddings using models like OpenAI or SBERT, and analyze how your content clusters in vector space.

- Supports similarity search, hybrid (keyword + vector) search, and can be extended for multimodal use cases.

- Can be used to test and refine how your content is embedded and retrieved.

- Provides enterprise-grade embedding analysis and can be integrated with your site to monitor and optimize for AI search visibility.

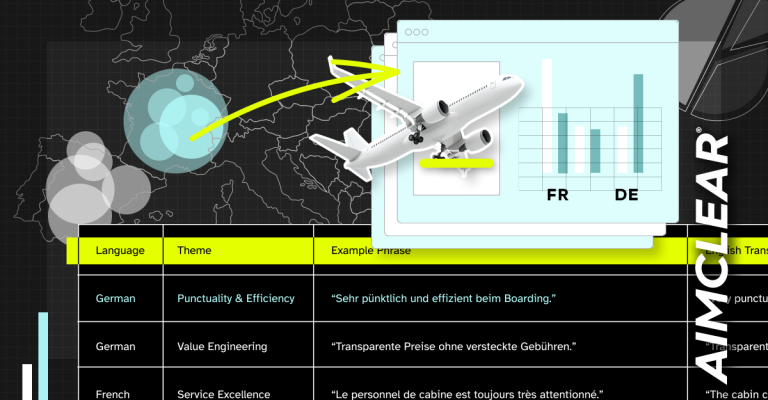

Vec2Vec Translation (from Cornell Research)

Harnessing the Universal Geometry of Embeddings is a research paper from Rishi Jha, Collin Zhang, Vitaly Shmatikov, and John X. Morris at Cornell. It proposes that many different language models, despite differences in architecture and training, encode meaning in geometrically similar spaces.

Using a method called Vec2Vec, the authors show how embeddings from one model can be translated into the representational space of another without paired training data. While primarily a contribution to theoretical ML and cross-model interoperability, the early findings suggest that AI systems may be more compatible in how they structure meaning than we previously thought. That opens the door for downstream tools to align older or siloed embeddings with newer model expectations.

The Cornell team’s Vec2Vec method offers a promising research direction: translating embeddings across models, even when those models don’t share architecture or training data. In theory, this means content processed by older or siloed systems could be made compatible with newer models—without rewriting or re-training. While this isn’t a plug-and-play SEO solution (yet), the insight reinforces a broader point: different AI systems may interpret content similarly, but not identically. As a practical step, audit the AI-enhanced tools in your stack such as chatbots, summarizers, or site search. Identify what content they’re embedding, what models they’re using, and where those interpretations may drift from your intent. That’s where semantic misalignment and optimization opportunity often begins.

- Translates embeddings from one model’s space to another, potentially helping your content be interpreted more consistently across different AI systems, even if they use different embedding models.

- If your site or tools rely on older embedding models, this technique may help align your vectors with those used by newer LLMs like GPT-4 or Claude, improving cross-system visibility.

This process of analyzing actualized embeddings, optimizing content based on those insights, and repeating the cycle gives SEOs meaningful leverage in AI-driven environments. Rather than guessing what Google or Claude might favor, guide how the next generation of systems interprets your site by shaping how your content is encoded into embeddings and interpreted.

You Got This

SEO has always been part art and part science. Today, the science part is more machine learning infrastructure than content hacks. You don’t need to become a data engineer but your team needs to think like one. Start by mapping your stack. Ask better questions about where embeddings live, who controls them, and how your content is being interpreted. The systems are already making decisions about you.

Note:Vectors shown in header image are example embeddings generated using ChatGPT. Locations on head are symbolic; not actual spatial encodings.