Facebook has taken a lot of heat over advertisers' ability to target haters, extreme political views and other psychographic hyperbole. The recent media interest amuses us, because we've been documenting each generation of Facebook Ads political-extremism targeting (2009) and other radical psychographic targeting for years.

LinkedIn has similar extremist targeting problems on the advertising side (LinkedIn Ads) and in human search (Sales Navigator). Read on for examples that include LinkedIn Jew haters, terrorist job titles and other nasty paid and organic (free) psychographic targeting.

Sales Navigator is LinkedIn's powerful business search engine. With an inexpensive account, it's easy to identify highly focused people all over the world by job title, employer, LinkedIn Groups and numerous organic (unpaid) psychographic targeting attributes. Sales Navigator is arguably even more powerful than ads because it lets us send InMails that reach LinkedIn users directly, not to mention connect with them. As a marketer, I find that interacting directly with a known person can be more powerful than any ad. We'll get to LinkedIn Ads paid targeting after Sales Navigator.

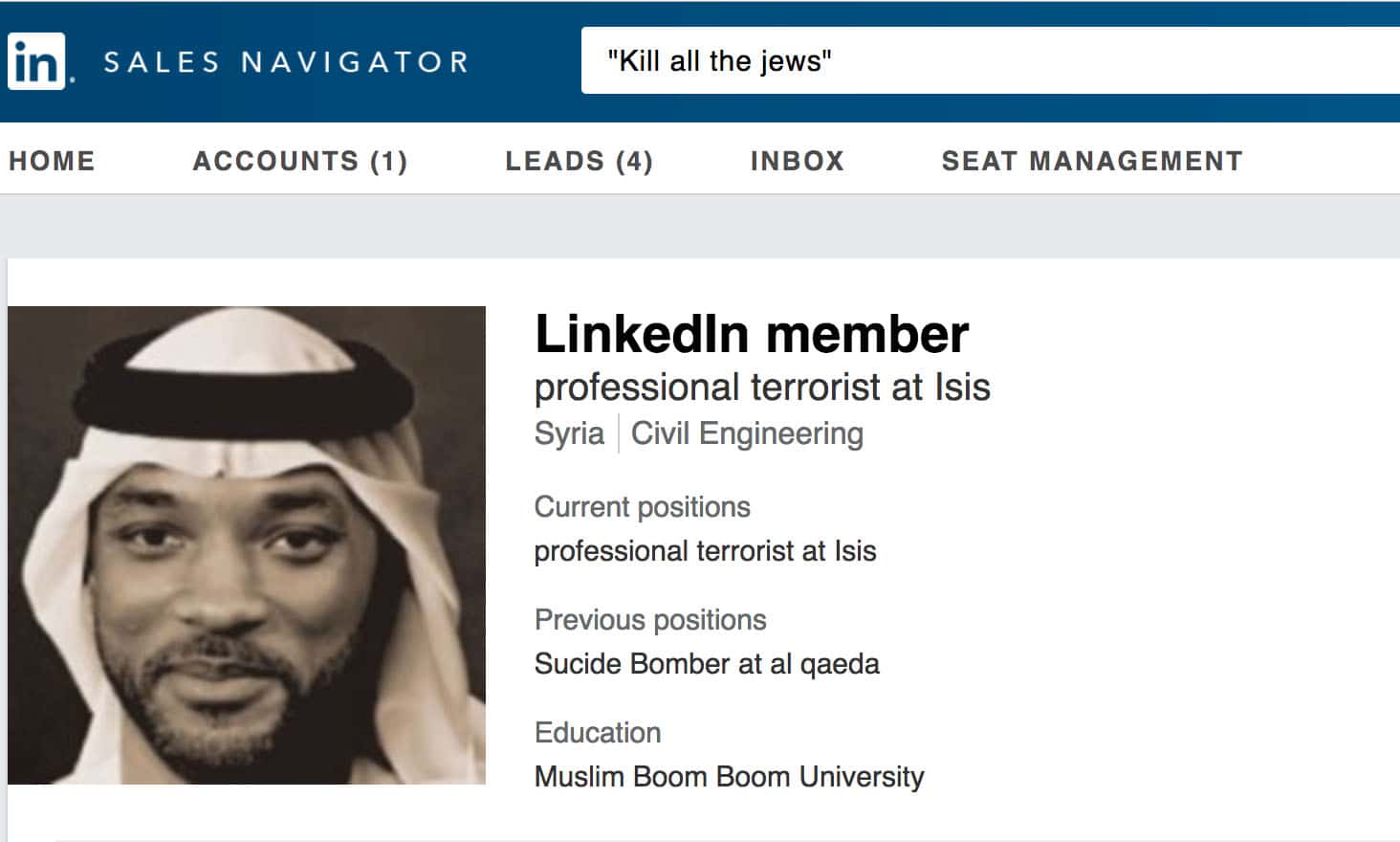

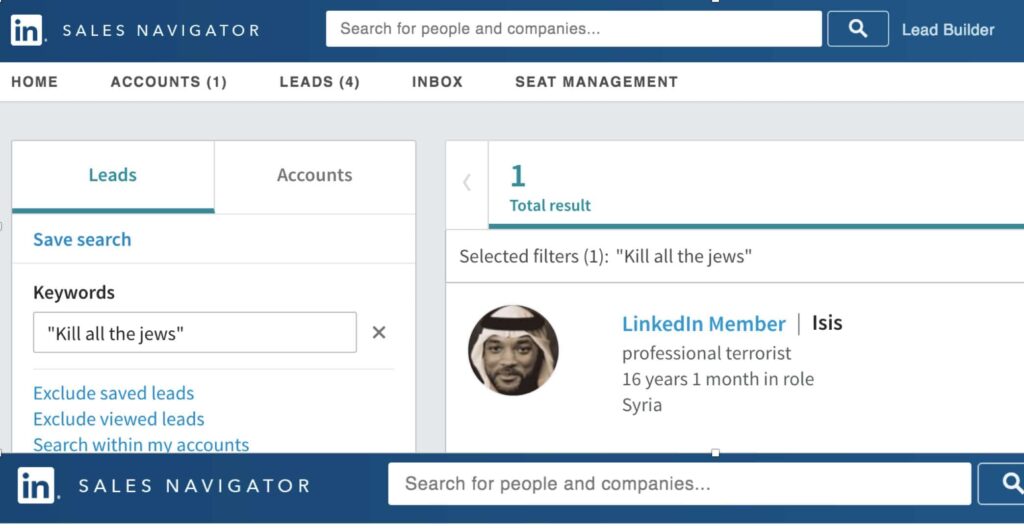

Job Title: Professional Terrorist, Country: Syria. We found this man by searching Sales Navigator for "Kill all the Jews." LinkedIn allows this job title.

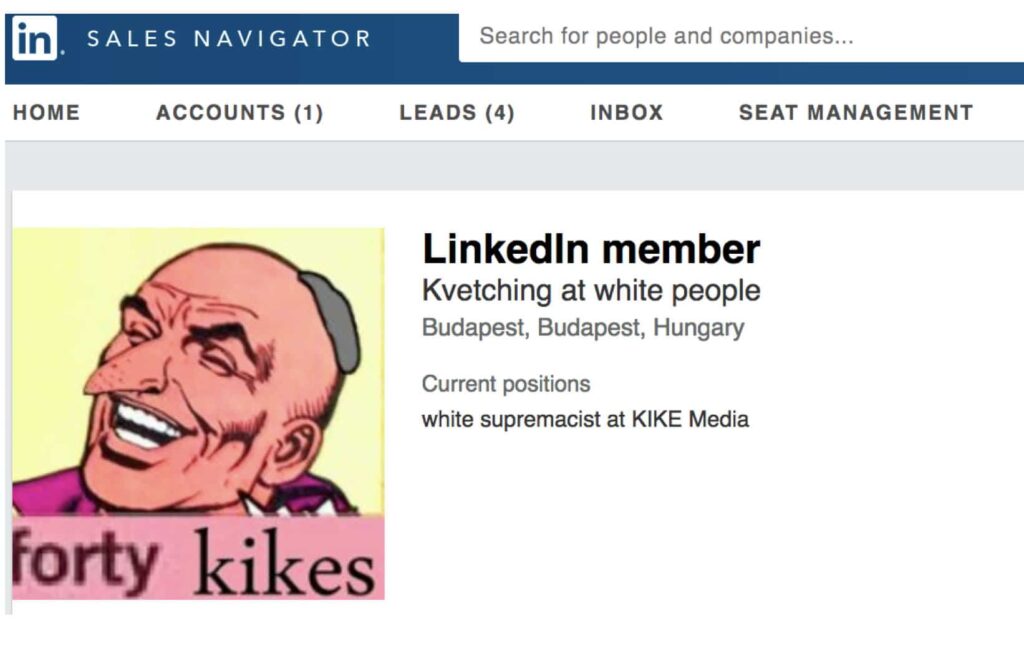

Job Title: White Supremacist. It's easy to contact this person directly via InMail.

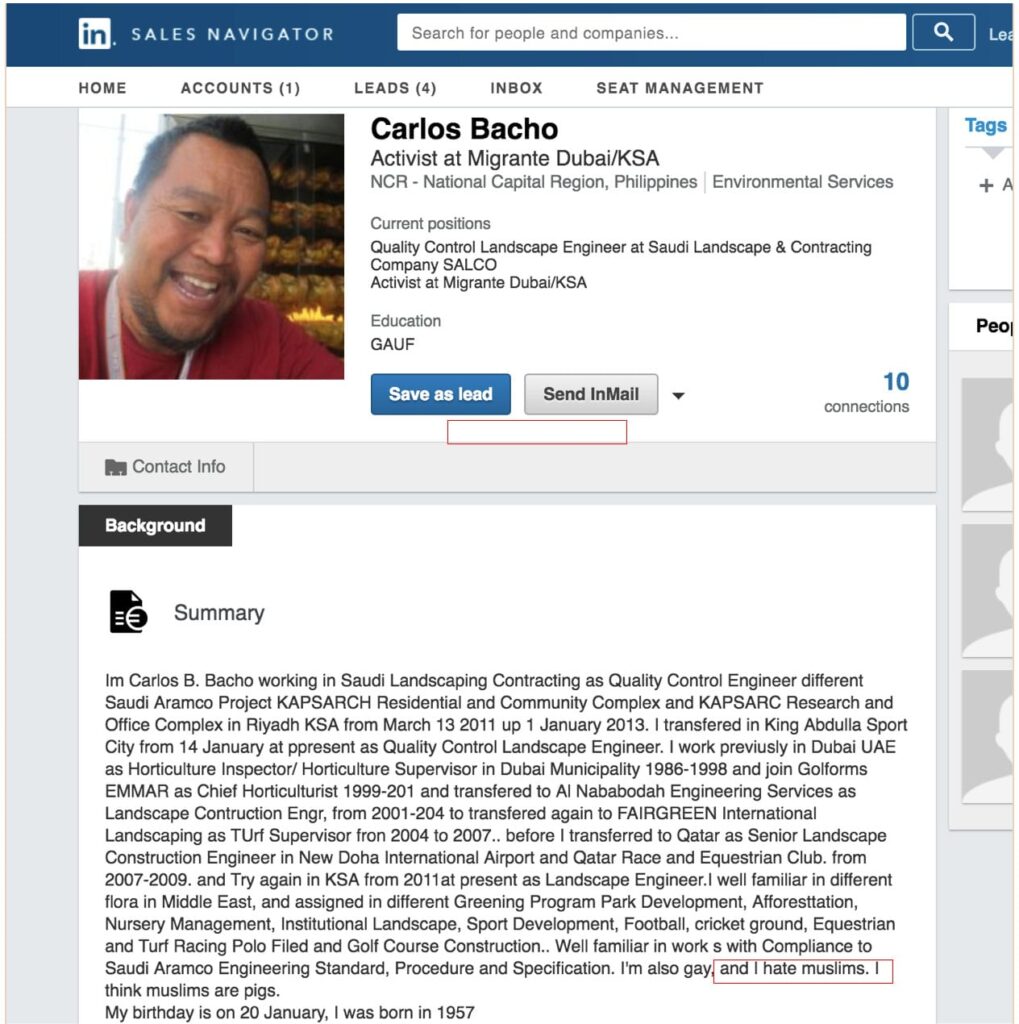

We searched Sales Navigator for "I hate Muslims" and found this gem. Read Carlos Bacho's profile carefully. He hates Muslims and thinks they're pigs.

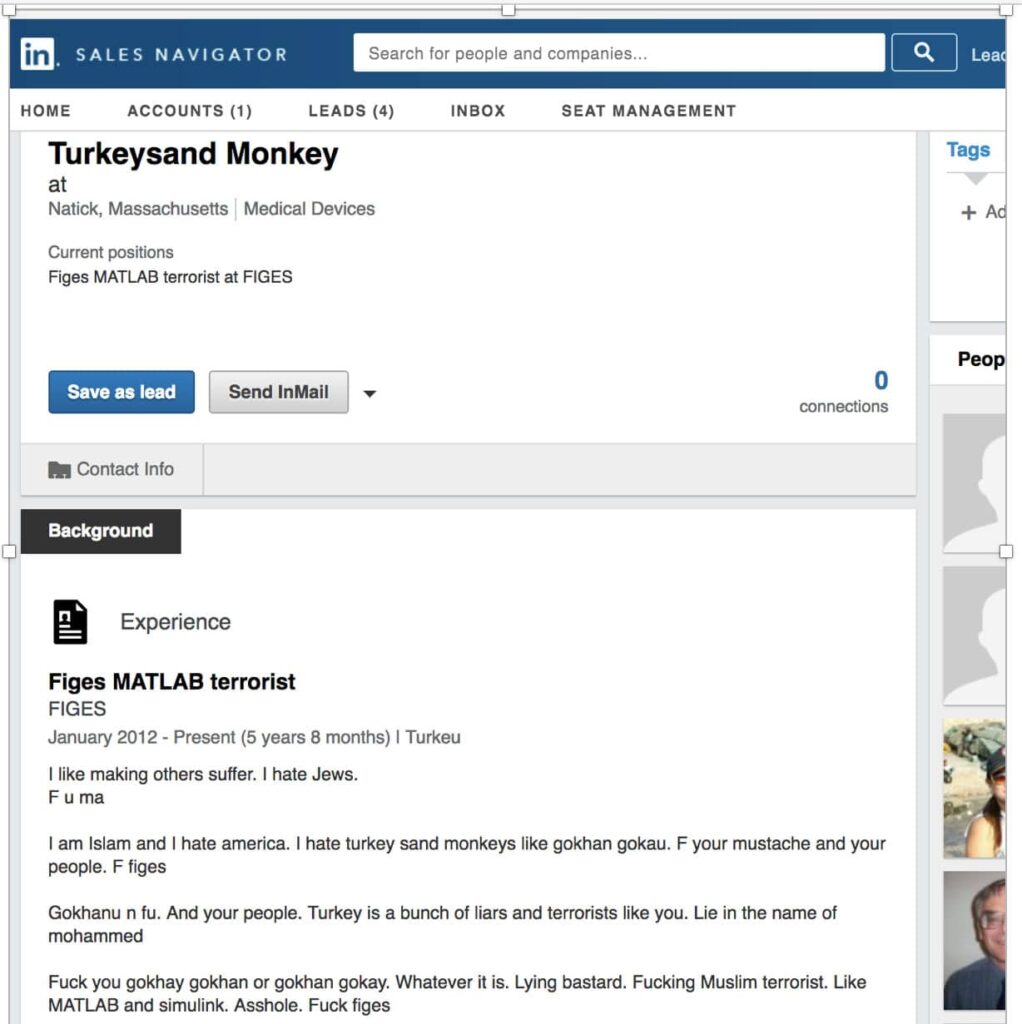

LinkedIn allows this person to use a fake first and last name. Read through the profile: seemingly not a nice person. Meet him or her on LinkedIn. Meet for coffee.

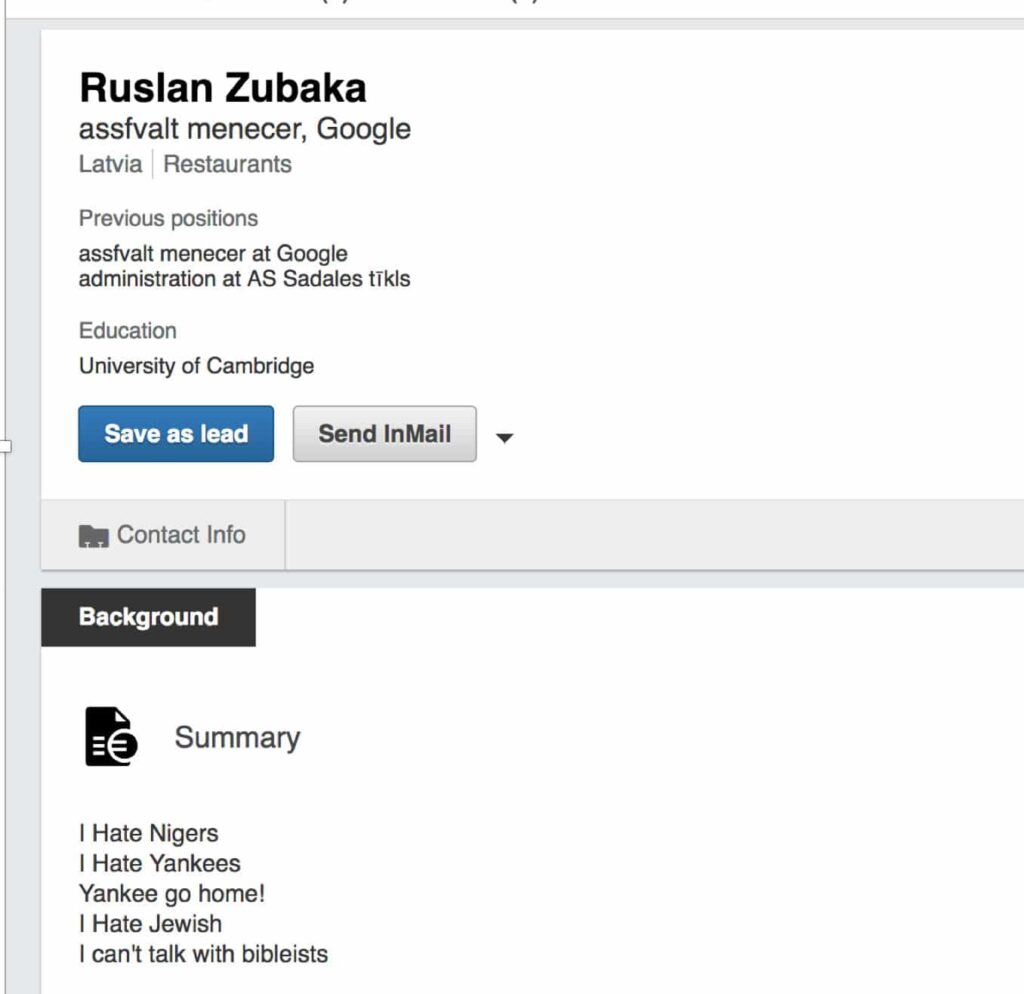

Ruslan has a lot of hatred. LinkedIn allows this profile.

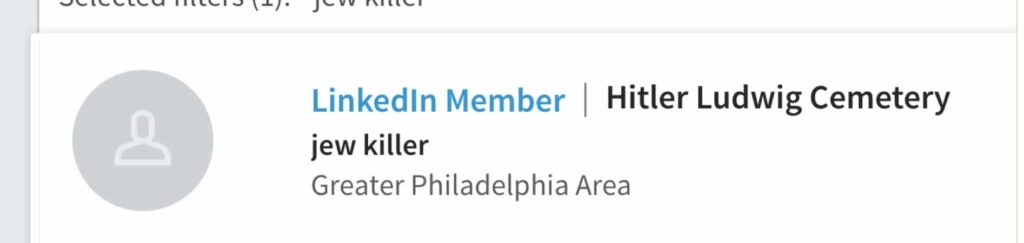

Job Title: Jew Killer

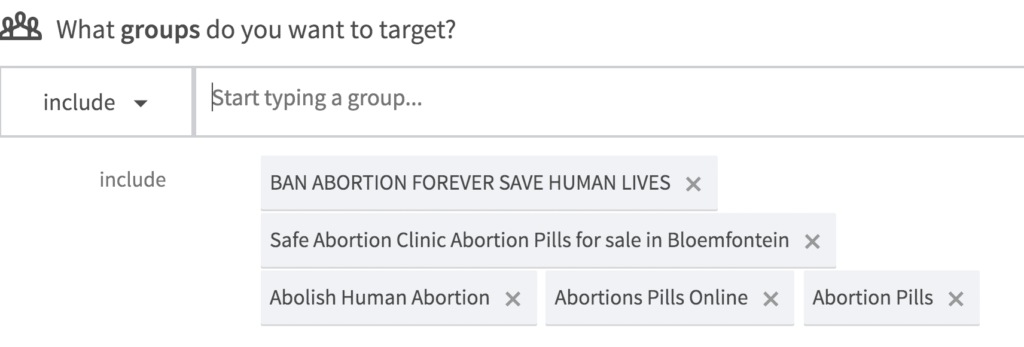

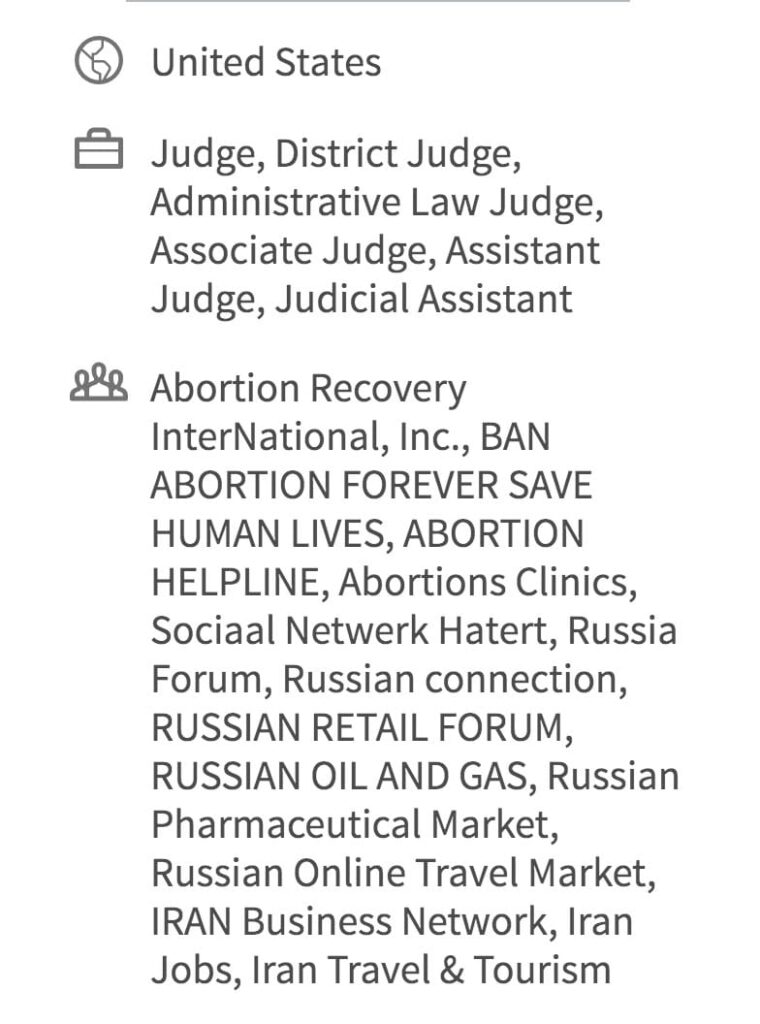

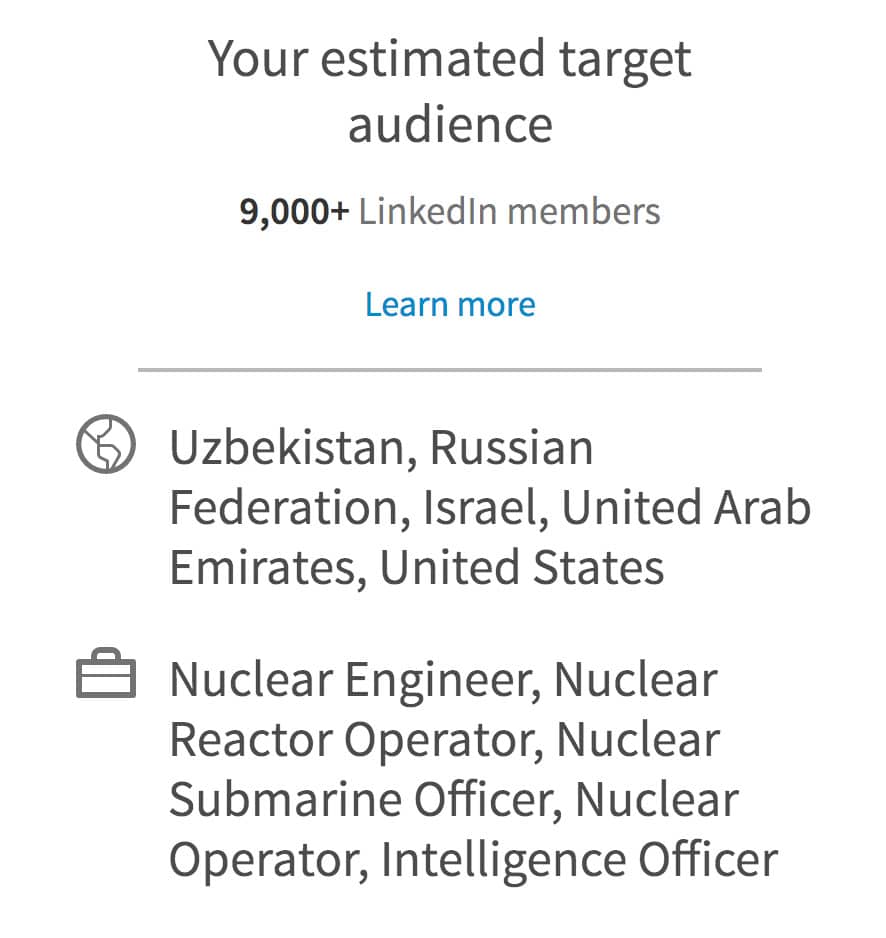

Now let's talk about LinkedIn paid advertising. LinkedIn Ads seems to prevent straight-up hate targeting. However, there are plenty of other potentially extreme targeting options. In addition to serving ads to these folks, it's easy to track down most of them in Sales Navigator and contact them via InMail to build online relationships.

LinkedIn will say that some of these targeting clusters are too small to actually run ads against. No problem: we can pad these small segments with additional LinkedIn users to build up the numbers and thereby enable ads.

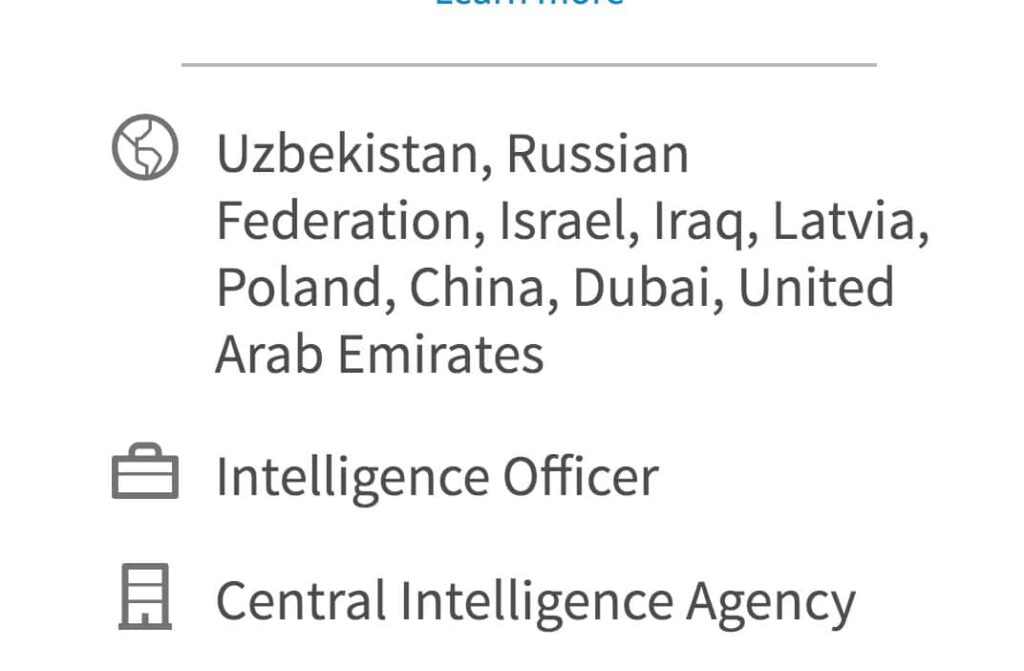

Run LinkedIn Ads to intelligence officers in various countries. You can also find some of them and contact them via Sales Navigator.

LinkedIn allows business groups built around various positions on abortion.

Target potentially slanted judges and their staff with LinkedIn Ads.

In case your country is shopping for global nuclear technologists, it’s easy to target such users with LinkedIn Ads.

Examples provided here just scratch the surface. Be creative in exploring Sales Navigator searches and LinkedIn Ads targeting.

In reality, it's nearly impossible for a social platform's crawl algorithms to reliably distinguish hatred from innocent endeavors. For example, when a documentary producer covers the subject of hating Jews, the keywords "Hate Jews" will appear in the user's profile, describing a film that illuminates antisemitism.

Facebook has been working for years to gradually strip out nasty targeting attributes. The media just crucified Facebook, but in reality the number of targetable people was very low. Also, it's hard to imagine any social channel without the same problem. The real issue at hand is that some, even many, humans are nasty. It is also possible that some, if not all, of these profiles are fakes or spoofs.

In response to the bad PR, Facebook (temporarily?) stripped targeting data mined from crawling user profiles. Facebook's reported fix removed employer, education and other targeting elements crucial to marketers (or did it?). This move certainly cost Facebook millions in ad spend. It also ruined many a marketer's campaign.

Social advertising is a double-edged sword, and its abuse is almost impossible to fully detect. Social media mirrors physical life. The real problem is extreme humans.