What if I told you a data loophole of sorts still exists on a platform billions use every day, one that any unscrupulous advertiser could exploit?

A couple weeks ago, I saw a wonderful thread on Twitter, dissecting why the sharing of data in light of the 2016 elections is a big deal.

Don’t worry, this post isn’t political — but it did remind me there are many ways in which data gets shared, and it’s rather mysterious to your average citizen who doesn’t geek out in ad platforms all day.

Speaking of which, I’m a total nerd, so you’re going to have to bear with me as I now explain the Shadow Broker.

In the brilliant video game franchise Mass Effect, there’s a mysterious figure referred to throughout the plot lines, known as the “Shadow Broker.” The Mass Effect wiki defines him as: “an individual at the head of an expansive organization which trades in information, always selling to the highest bidder.” When his identity is finally revealed in the game and he’s subsequently defeated, one of the protagonists takes over the role to mitigate the historically evil part the Shadow Broker played.

Historic Shadow Brokers

Back in the day, the type of data out there was different, and could only be manipulated in limited ways. Things like address blocks in a certain zip code (to target the wealthy) or mailing lists of newlyweds could be easily purchased, but they didn’t carry much angst, since their sole purpose was to send something like a postcard about a travel offer.

The shadow brokers of the time were mailing list providers.

Then, email marketing emerged, resulting in mailing list 2.0. Data integrity became more questionable since there was no way to know quality until the list was used. CAN-SPAM compliance entered the picture in 2003 to crack down on the relentless and uncontrolled spread of selling email lists.

Shadow Broker Creates Drones

As in any life cycle, there were limits to the data available at any given time (email addresses would die off, quality was junk, emails weren’t valid, etc). Humans are innovative by nature, though, and the quest for “more and better” continued, culminating in the creation of the tracking pixel.

The snapshot-in-time category of data had passed its usefulness.

It was time to move to more accurate, dynamic data reflecting the living, breathing entities driving the growth of the internet and the activity on it. Marketers wanted to move at the speed of humans, and not have to constantly buy lists to do so…which were, by the way, lists that may encompass behaviors no longer relevant. After all, what good is a one-year-old list of users who were car shopping? They bought one at some point already.

Enter the pixel: the lovely little code snippet that would sit within emails and website code to slap a user number on you, and follow you around. Powering things like in-market audiences meant purchasing data sets based on recent demonstrated behaviors, and not a static email that may or may not even be a person.
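For the fellow nerds, here is a rough sketch of what “slap a user number on you” can look like on the server side. It is a toy model, not any vendor’s actual code: the `handle_pixel_request` function, the `uid` cookie name, and the in-memory `event_log` are all made up for illustration.

```python
import base64
import uuid

# A commonly used transparent 1x1 GIF: this tiny image is the "pixel" itself.
PIXEL_GIF = base64.b64decode(
    "R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7"
)


def handle_pixel_request(cookies, page_url, event_log):
    """Hypothetical handler for the image request a page or email triggers.

    cookies   -- cookies the browser sent along with the image request
    page_url  -- the page (or email) that embedded the pixel
    event_log -- a plain list standing in for the tracker's database
    Returns the image bytes plus the cookies to set on the response.
    """
    user_id = cookies.get("uid")
    if user_id is None:
        # First sighting: assign a random, anonymous user number.
        user_id = uuid.uuid4().hex
    # Record the behavior against that number, not against a name or email.
    event_log.append({"uid": user_id, "url": page_url})
    return PIXEL_GIF, {"uid": user_id}


# The same browser loads two pages that embed the pixel.
log = []
_, cookie_jar = handle_pixel_request({}, "https://example.com/suvs", log)
handle_pixel_request(cookie_jar, "https://example.com/financing", log)
```

Both log entries end up with the same user number, which is the whole trick: no name is needed to know that one browser looked at SUVs and then at financing.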

While this still goes on, the eye of regulation is studying it closely, evaluating the ethical implications behind using such personalized behavior for things like marketing. Marketers are continually challenged to create transparency around how data is used. GDPR was, in effect, enacted to create such boundaries.

But what about the opportunities to exploit data trading that still exist? They are out there.

Facebook as Today’s Shadow Broker

Let’s look back at that original Twitter thread I mentioned. The one thing it references repeatedly is also the vague notion spouted by talking heads a lot: “data sharing.”

The concept of data sharing sounds complicated, and it used to be for the average person. In its simplest form, we’re talking about sets of third-party data. Endless spreadsheets of cookie IDs, organized into behaviors, which really weren’t useful to nascent advertisers – they needed a tool that could synthesize that list and deliver ads against it.

Entirely doable in programmatic display, but the minimums required to enter into that secret club were pretty cost prohibitive in the beginning. Vendors also paid close attention, because they didn’t want to seem overly creepy. (I vividly recall discussions around creative retargeting, and avoiding it at the agency I was with at the time. Hahahahahahaha *takes a deep breath* hahahahahaha!)

Obviously, public acceptance of advertising tactics became more widespread. Sure, every once in a while someone still asks me how Facebook Ads is able to show a product they looked at elsewhere, but social media users generally view it as normal now. As a result, those concerns lessened to an extent.

Did We Reach Peak “Minimal Concern”?

You would think, given the privacy woe explosion (woe-splosion?) that started with Cambridge Analytica, companies would be more careful than ever. Google operated ahead of the curve with things like removing keyword visibility, but also managed to stay more under the radar while Facebook proudly plunged in head-first.

Despite outward appearances, Facebook still has a feature that allows everyday people to become today’s Shadow Brokers. One doesn’t need fancy cookie sets, backroom dealings of email lists, or anything requiring special coding knowledge.

In fact, we’re talking about a feature anyone can easily access if they sign up for a Facebook Ads Account.

Here’s how it works: You go to a website and see the now-ubiquitous “hey, we use cookies, cool bro?” message. You click “yes” out of habit. You cruise around, read stuff, do what normal people do, and leave, never giving it another thought.

Later, while scrolling through Facebook, you see ads related to what you looked at (again, we’re all getting pretty used to seeing those). In short, websites collect data on you, something we’ve accepted for many years now.

And yeah, you’ve heard about the concept of data sharing, but whatever, it’s a site you’ve been to a million times, and what are they going to do with it, anyway?

But what if the website isn’t who you think it is? Or it’s buddied up to sites you wouldn’t want to be associated with?

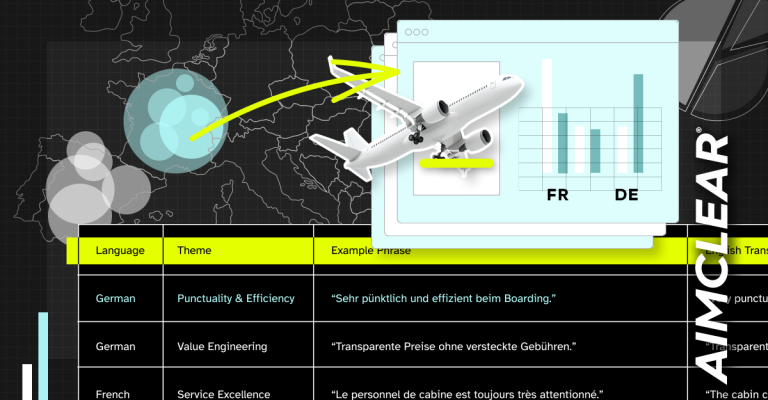

As you know, Facebook has a legitimate and useful tracking pixel for its Ads product. When you create an Ads account, you get a pixel and put it on every page of your website. It collects user behavioral data the advertiser can’t actually see. Facebook aggregates the data and then uses it when you want to target users with ads. Advertisers can carve up what URLs the audience visited, create buckets of users based on things like time spent on site…things that don’t really identify people.
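To make the “carve up” part concrete, here is a minimal sketch of audience bucketing. It is not how Facebook implements it; the `build_audience` function, the event fields (`uid`, `url`, `seconds`), and the sample data are assumptions made up for illustration.

```python
from collections import defaultdict


def build_audience(events, url_contains=None, min_seconds=0):
    """Toy audience builder: returns the set of anonymous user IDs whose
    matching page views add up to at least `min_seconds` on site.

    events       -- dicts with hypothetical keys "uid", "url", "seconds"
    url_contains -- only count page views whose URL contains this text
    min_seconds  -- minimum total time across the counted page views
    """
    seconds_by_user = defaultdict(int)
    for event in events:
        if url_contains is None or url_contains in event["url"]:
            seconds_by_user[event["uid"]] += event["seconds"]
    return {uid for uid, total in seconds_by_user.items() if total >= min_seconds}


# Made-up pixel events for three anonymous visitors.
events = [
    {"uid": "a1", "url": "/products/widgets", "seconds": 95},
    {"uid": "a1", "url": "/blog", "seconds": 20},
    {"uid": "b2", "url": "/products/gadgets", "seconds": 10},
    {"uid": "c3", "url": "/blog", "seconds": 240},
]

product_viewers = build_audience(events, url_contains="/products")
engaged_visitors = build_audience(events, min_seconds=60)
```

Here `product_viewers` comes out as visitors `a1` and `b2`, and `engaged_visitors` as `a1` and `c3`. In the real product the advertiser works with the bucket as a targeting option and never sees the individual IDs inside it.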

Sounds harmless, right? At least when in the hands of ethical marketers.

But did you know the advertiser can then go on to basically share the data with any other ad account they want?

And then you can put in any ID you want, so that business unit has access to the pixel and its data.

So Yeah, it’s Harmless Until….

For those of us who work in marketing, this was really the main reason Cambridge Analytica was such a thing: someone finally jumped the shark. They used the tools we knew were there to manipulate people, essentially crafting confirmation-biased propaganda to affect some major world outcome. It was marketing via manipulation.

For quite a few, the shock wasn’t so much about the election, but more about the fact someone finally broke open the Pandora’s box legitimate and ethical marketers had tiptoed around for a while. It went beyond the things we regular marketers do daily to sell items, and took them to the dark fringes we knew they could reach.

Data for Sale! Data for Sale!

I had a client whose products would randomly trigger ad disapprovals and ad account disablement. While they weren’t violating any terms, it was easy for Facebook to automatically scan an ad and assume they were selling firearms. For a relatively small business like theirs, Facebook was a huge avenue of selling, so ad disapprovals severely affected them. They produced the products themselves, so the highs and lows of demand caused ripple effects.

The client had set up multiple ad accounts to handle the situation before I came on board, but it was still an uphill battle because they didn’t quite understand how pixels worked.

Their most successful cohorts at the time were lookalike audiences, so you can imagine the devastation if their ad account was disabled: all that data was held hostage. They couldn’t even do remarketing.

Except they could. Because they had these other accounts, the pixel could just be shared with them. All audiences could be recreated, and they just sat on the bench until Facebook wrongly screwed with their main one. Then an account in waiting would be switched on until the disabled one was resolved and reinstated.

Perfectly legitimate use, but what if it was run by a scumbag agency or vendor? The client had no idea how the Facebook Ads account ecosystem really worked. It would have been easy for a jerkstore vendor to approach their competitors and get money to give them access to the pixel, right?

We Can’t Have Nice Things

I wish we could, but I’ve seen some pretty underhanded stuff after all these years. Cambridge Analytica exposed the shadowy places nefarious marketers could go, so it can boggle the mind that these other functions haven’t been removed, given some of Facebook’s other decisions around privacy and targeting.

I mean, seriously? I can’t see anything anymore in Insights about the 200,000 people that visit a client’s website a day, but theoretically I could rent pixel access to the highest bidder for them to gain a competitive advantage?

Makes total sense. </sarcasm>

Y’all don’t mind me. I’m just gonna sit over here with my popcorn and wait for the next headline about Facebook failing to regulate things on its own platform. I won’t even say I told you so. (Ok, I might.)