The public’s wariness about AI reflects a complex mix of fascination and distrust, often fueled by misunderstandings and contradictions.

A recent experience on LinkedIn exemplifies this: a long thread erupted, heaping praise on a blog post I wrote about AI training, but also sharply criticizing it for supposedly being AI-written. The post, created as AI first exploded into mainstream consciousness, clearly has telltale AI elements, yet readers loved the advice it offered. This duality underscores how public attitudes toward AI are riddled with assumptions, often unfairly discrediting both human effort and AI support.

Plagiarism is also a concern. It’s been hard to avoid horror stories of corporate secrets blown and unoriginal copy making it past editors to publication. Concerns about potential search engine bias—either favoring or penalizing AI-created content—further complicate the issue, leaving creators to wonder if their work is being judged on merit or mechanics. While AI can undeniably be a powerful tool for productivity and learning, its overuse has tarnished certain words, turning terms like “authentic,” “disruptive,” and even “curated” into clichés.

Despite this tension, AI tools are indispensable for many, from writers looking to refine their drafts to thought leaders merging their original insights with large language model data, and educators crafting more engaging content. For anyone striving to communicate clearly and effectively, AI can be your partner and, in the hands of highly skillful users, a strong, partial replacement for human efforts. This is especially true when AI is used to compartmentalize processes and work on sections of output.

First, we’ll look at ChatGPT examining itself and flagging potential AI content in its own output. Then we’ll look at commercial tools designed to flag AI content and plagiarism.

ChatGPT Self Evaluation and Human Perception Scale

ChatGPT can evaluate its own copy according to what it has called a “Human Perception Scale” (HPS). Don’t depend on HPS alone, but self-checking with ChatGPT can be a starting point. Its human perception ratings are less a rigid metric than a concept: a way to judge how human readers may perceive the quality and origin of writing. The basic goal is to gauge whether a piece of text feels human-made or machine-generated based on subjective impressions. If you don’t care about self-rating with ChatGPT 4, skip below to “Commercial AI/Plagiarism Detectors Compared.”

What Shapes ChatGPT’s Human Perception Rating?

This framework may include factors like:

- Naturalness (or Fluency): Does the text flow the way we expect human writing to? AI often nails fluency but sometimes misses those tiny quirks or imperfections that make writing feel real.

- Originality: Does it spark creativity or feel unique? Machine-written text can come across as repetitive or overly structured, while humans tend to bring fresh ideas or unexpected turns.

- Emotional Resonance: Can the text connect on a deeper level? People often infuse writing with personal touches or emotions that machines struggle to replicate.

- Contextual Relevance: Is it spot-on for the audience or the situation? A human writer will adapt tone and detail to fit, while AI might feel too broad or cookie-cutter.

- Quirkiness and Errors: Does it have a human touch, like a random tangent or a typo? Ironically, those little “mistakes” can make writing feel more genuine.

Ask ChatGPT to analyze its own output and it may generate a Human Perception Score, a term it coined itself.

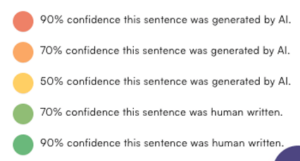

Scoring Human Perception

ChatGPT applies a scale to text based on how “human” it feels:

- 1-3: Robotic, formulaic, clearly generated by a machine

- 4-6: A toss-up—it could go either way

- 7-10: Natural, creative, and convincingly human

Simply prompt ChatGPT to give you a human perception score. Once you get the results, you can prompt ChatGPT to tell you which copy it flagged as human or not.
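If you log scores across several drafts, the three bands above can be captured in a few lines. This is only a sketch of the scale as described here: the function name and band labels are our own shorthand, not anything ChatGPT exposes, and the score itself remains a subjective impression.

```python
def perception_band(score: int) -> str:
    """Map a 1-10 human perception score to the band it falls in.

    The name and labels are illustrative shorthand for the scale above.
    """
    if not 1 <= score <= 10:
        raise ValueError("score must be between 1 and 10")
    if score <= 3:
        return "robotic"   # 1-3: formulaic, clearly machine-generated
    if score <= 6:
        return "toss-up"   # 4-6: could go either way
    return "human"         # 7-10: natural, creative, convincingly human
```

Calling `perception_band(5)` returns `"toss-up"`, which is a cue to look closer at the draft rather than a verdict.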

Commercial AI/Plagiarism Detectors Compared

Finding the right AI detector for your needs requires comparison. Some focus on pinpointing AI-written content, others highlight human originality, and a few do both. Below, we explore six commercial tools, breaking down their features, what makes them useful, and why they might be worth considering. Dedicated copy validation tools seem to outperform general AI models like ChatGPT in detecting AI content. While ChatGPT is designed for conversation and information, detection tools focus solely on identifying patterns unique to AI-generated text.

AI Detection Tools Comparison Chart

| AI Detector | Key Features | Pricing | Success Rate Claimed | User Ratings | Detection Methodology |

|---|---|---|---|---|---|

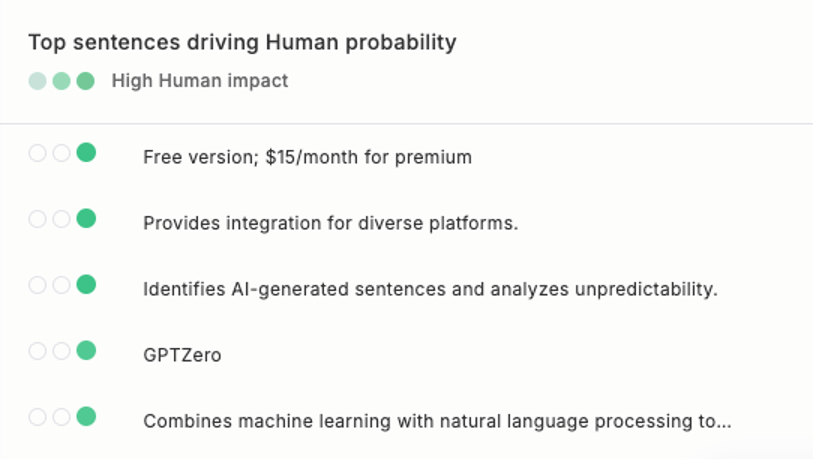

| Originality.AI | Scans for AI content alongside plagiarism and provides readability insights. Offers straightforward credit-based payment plans. | $14.95/month | High precision, over 99% with only a 2% false positive rate. | Rated highly by professional users for its dual functionality. | Combines machine learning with natural language processing to assess patterns in text. |

| GPTZero | Identifies AI-generated sentences and analyzes unpredictability. Provides integration for diverse platforms. | Free version; $15/month for premium | Designed to detect AI content with high confidence, though rates vary by text type. | Popular for educational settings and casual use. | Uses statistical models to measure text variability and burstiness. |

| Copyleaks | Analyzes text in multiple languages, detects code generated by AI, and performs plagiarism checks. | $16.99/month | Precise with a 0.2% false positive rate. | Strong user feedback for its multi-purpose functionality. | Relies on structured machine learning models and OCR capabilities for comprehensive analysis. |

| Turnitin | Specializes in academic texts, combining AI detection with traditional plagiarism checks. | $3/year per student | No detailed success rates but widely trusted by institutions. | Appreciated for its reliability in schools and universities. | Likely employs proprietary algorithms fine-tuned for academia. |

| ZeroGPT | Offers bulk analysis options with tools designed to spot AI generation quickly. | $9.99/month | Effectiveness depends on the AI model being detected. | Well-rated for fast and convenient results. | Applies deep learning to understand syntax and predict authorship. |

| Undetectable AI | Detects AI text and can rewrite it to avoid detection. Combines detection with a unique bypass feature. | $14.99/month | Success varies, given the nuanced dual-purpose design. | Generally appreciated for its innovative rewriting functionality. | Likely employs multi-model AI evaluation and paraphrasing tools. |

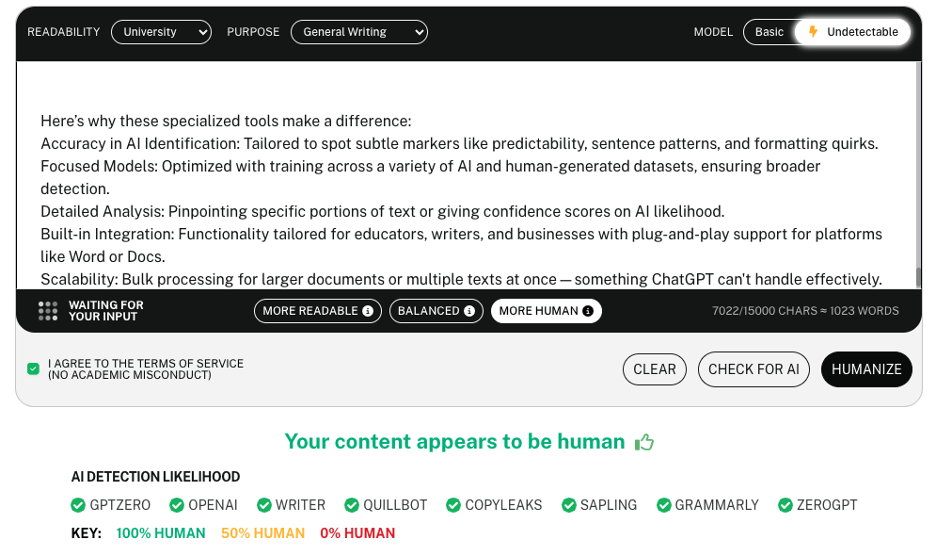
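To put the claimed false positive rates in the chart into perspective, here is a quick back-of-the-envelope calculation. It assumes a hypothetical batch of 1,000 genuinely human-written documents and takes the vendor-claimed rates at face value; real-world rates will likely differ by text type.

```python
def expected_false_flags(human_docs: int, false_positive_rate: float) -> float:
    """Expected number of human-written documents wrongly flagged as AI."""
    if human_docs < 0:
        raise ValueError("human_docs must be non-negative")
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return human_docs * false_positive_rate


# Hypothetical batch size; the rates are the vendor claims from the chart.
batch = 1000
for tool, rate in [("2% claim", 0.02), ("0.2% claim", 0.002)]:
    print(f"{tool}: about {expected_false_flags(batch, rate):.0f} of {batch} flagged in error")
```

At the 2% rate, roughly 20 of those 1,000 human-written pieces would be mislabeled as AI; at 0.2%, roughly 2. Even a small-sounding rate adds up at classroom or newsroom scale.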

Here’s why these specialized tools may make a difference:

- Accuracy in AI Identification: Tailored to spot subtle markers like predictability, sentence patterns, and formatting quirks.

- Focused Models: Optimized with training across a variety of AI and human-generated datasets, ensuring broader detection.

- Detailed Analysis: Pinpointing specific portions of text or giving confidence scores on AI likelihood.

- Built-in Integration: Functionality tailored for educators, writers, and businesses with plug-and-play support for platforms like Word or Docs.

- Scalability: Bulk processing for larger documents or multiple texts at once—something ChatGPT can’t handle effectively.
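To make “sentence patterns” and burstiness a little more concrete, here is a toy sketch of one possible signal: how much sentence length varies across a passage. This is our own simplified proxy, not the method any of the tools above actually use, and the function names are illustrative.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on sentence-ending punctuation and count words per sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (0.0 means perfectly uniform).

    A crude proxy only: commercial detectors rely on far richer models.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)
```

A passage of identically sized sentences scores 0.0, while one that mixes a one-word sentence with a long, rambling one scores much higher. On its own, a low score proves nothing about authorship; it is just one of the many patterns a detector might weigh.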

AI Detection / Plagiarism Tools Shootout Methodology

We ran three different pieces of writing through each of these tools:

- This article, from the beginning up until the end of the list in the previous paragraph, which is partially AI-written and partially human. We’ll call it “50/50.”

- A blog post from 2022 that was 100% human written and edited, representing a “Legacy Blog Post.”

- An article generated entirely by an AI model that had been trained on human writing, “100% AI.”

We chose the highest level of sensitivity for each of these tools.

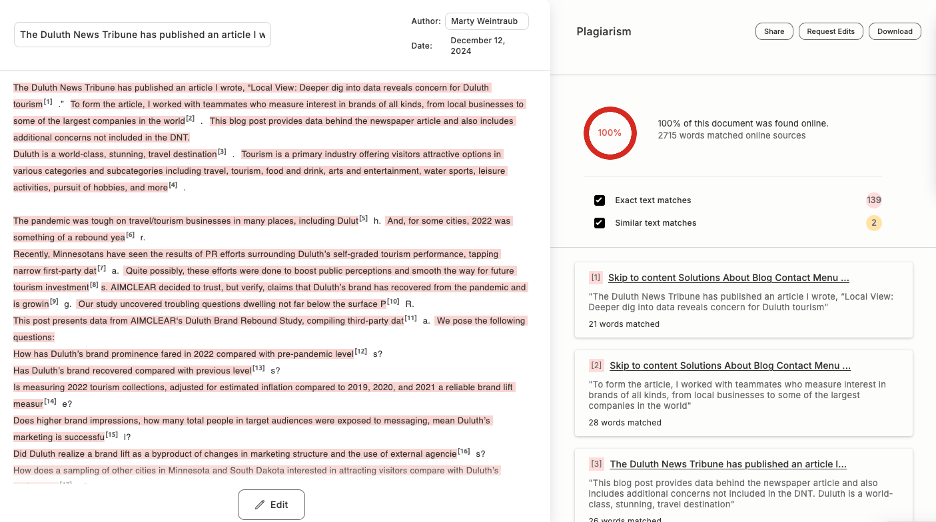

Originality.ai

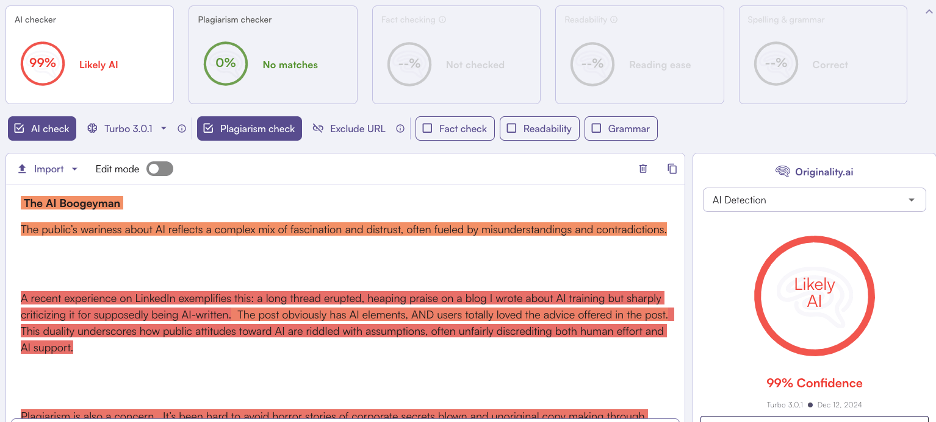

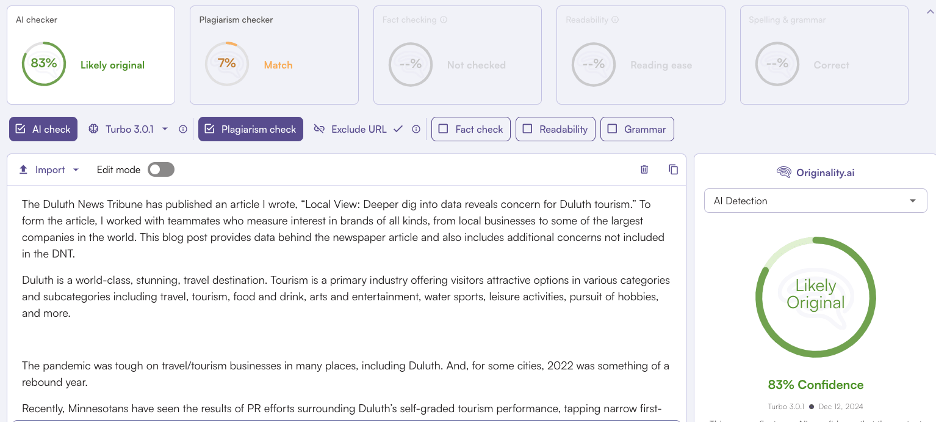

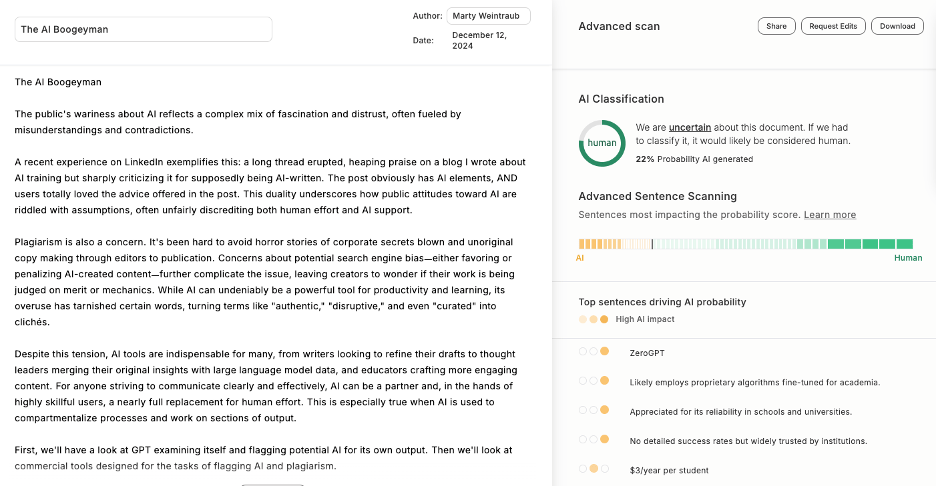

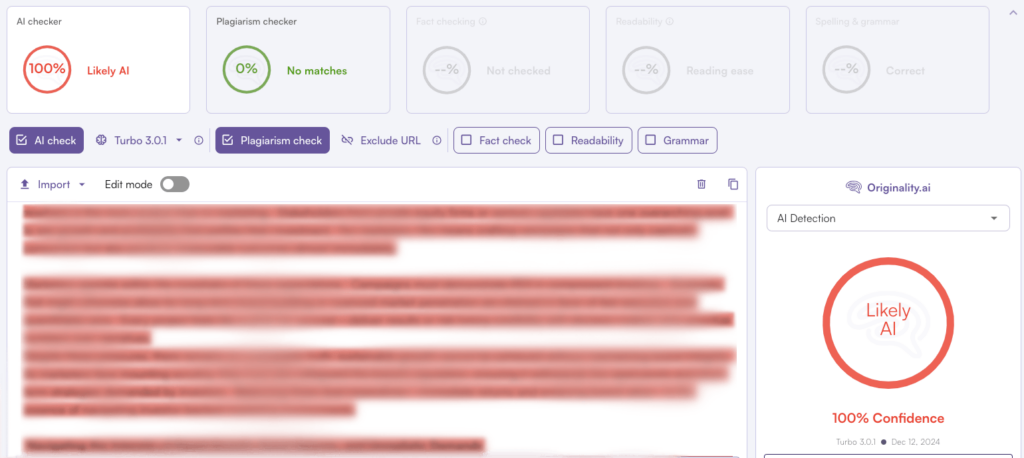

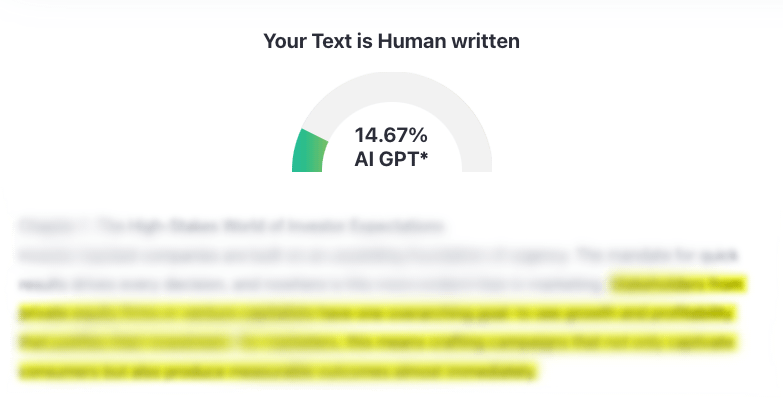

The 50/50 article:

A welcome feature is the color-coded breakdown of which sections the tool specifically thought were AI:

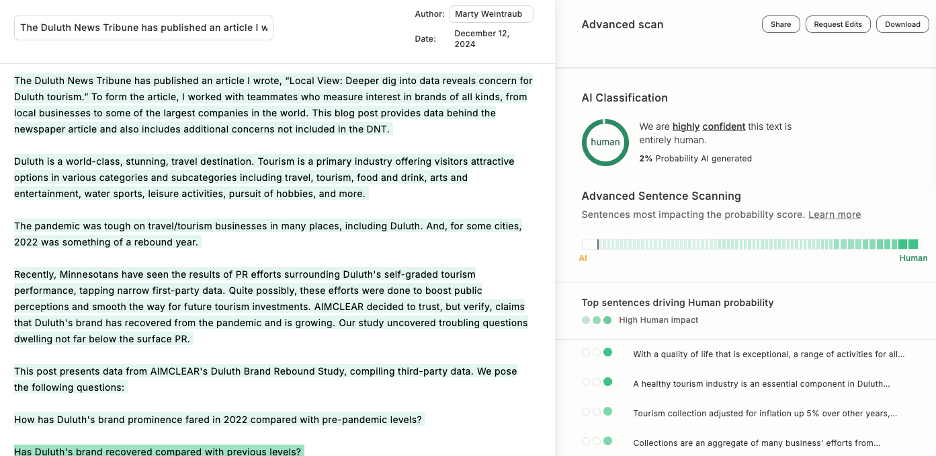

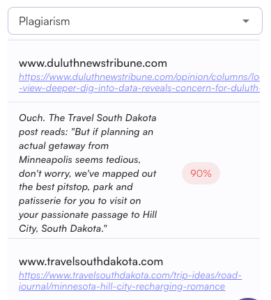

Results from our legacy blog post:

The plagiarism checker is very sensitive, but it tells you which specific sections it believes are copied and links to the original sources. You can also enter the source URL if you are checking something that is already published on the web (our first run flagged the post as 100% plagiarized because we didn’t exclude the blog URL). The particular hits were references to other posts as well as some definitions of Google products that came directly from Google:

The 100% AI-written piece:

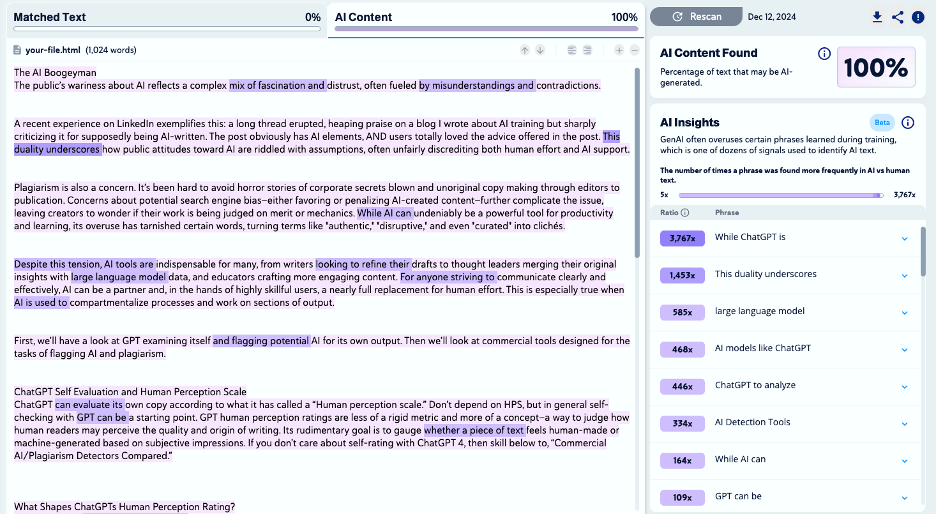

GPTZero

This tool was much less suspicious of our 50/50 article:

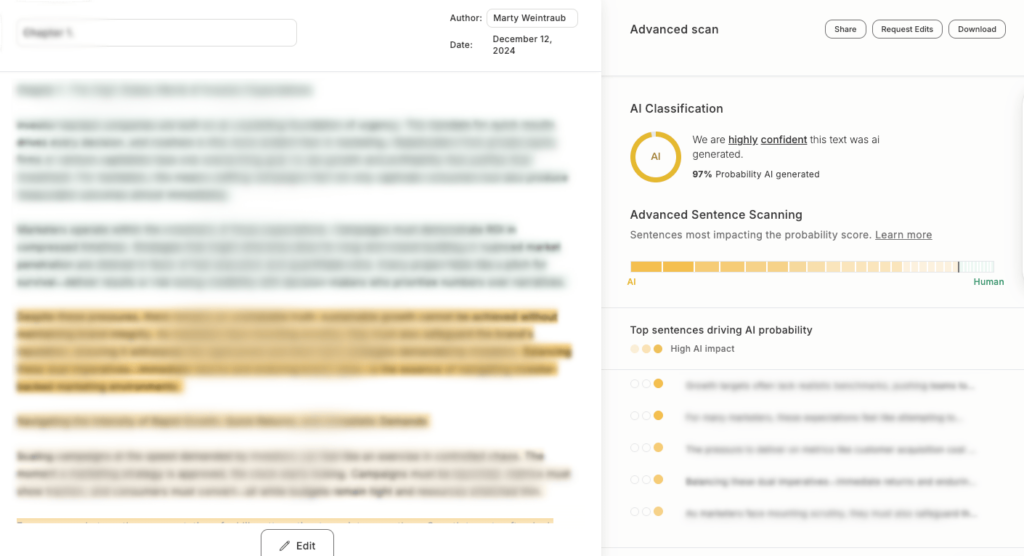

As you can see in the above image, GPTZero highlights which specific sentences are triggering its results. Interestingly, it flags “ZeroGPT” as being AI but “GPTZero” as being human:

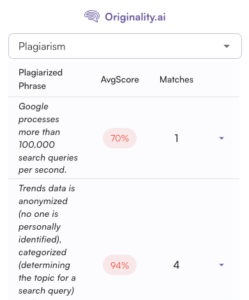

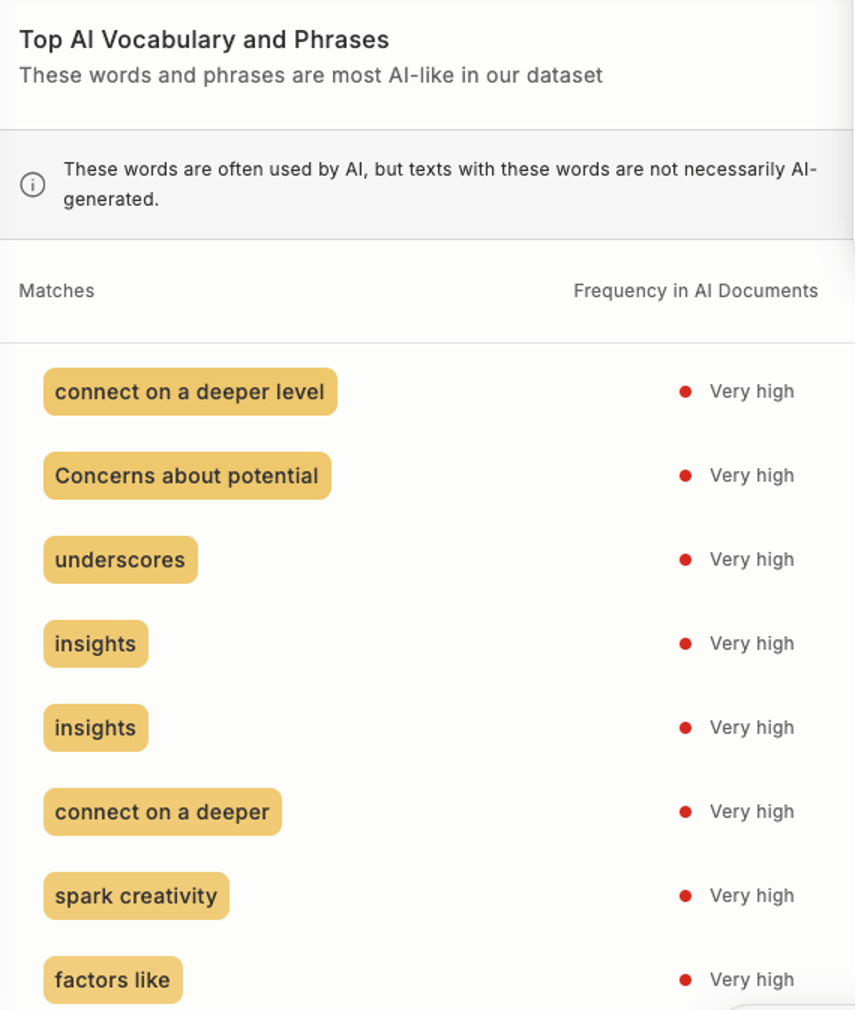

It also separately lists the words and phrases that it flags as likely AI-written:

It did agree that the legacy post was human-written:

However, GPTZero did not appear to offer a way to tell it that the post was already published online, so we were flagged as plagiarized:

It came as no surprise that GPTZero correctly identified the AI-written item as such:

Given how many phrases were called out in the partially AI-written post, we were surprised that, while the overall classification was 97% certain of AI generation, it gave us only two specific phrases: “potential to impact” and “creative vision.”

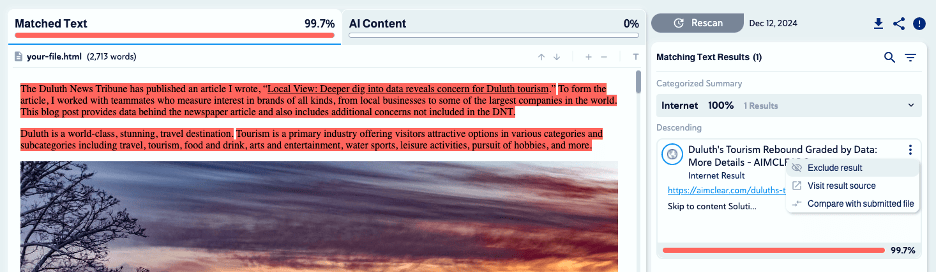

Copyleaks

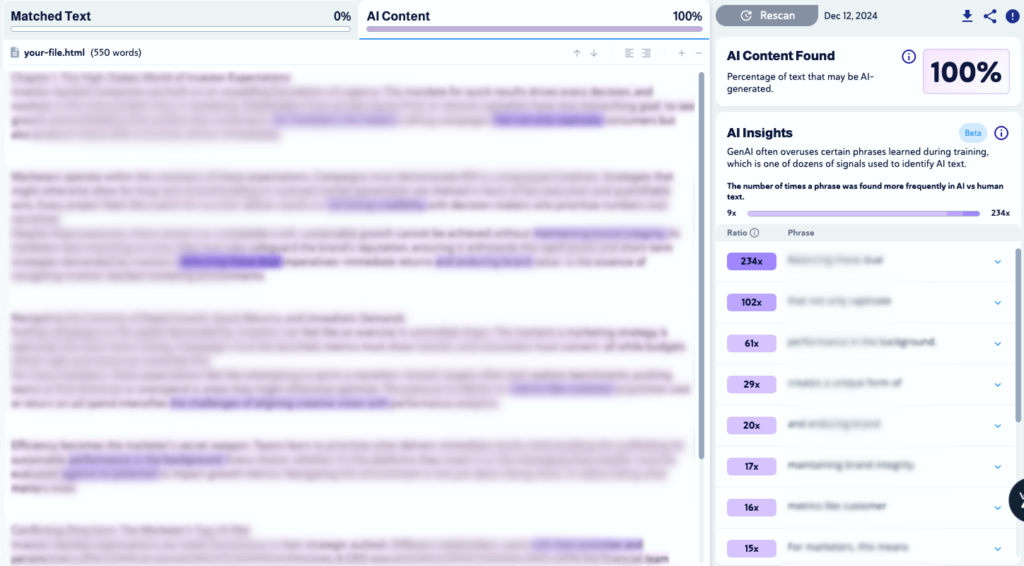

Copyleaks was completely certain that our 50/50 blog post was AI-generated, although its detection method seems suspect once you dive into the specific phrases it flagged. Is it assuming that anyone using the phrase “large language model” is most likely using one?

The plagiarism feature discovered the source material, but then allowed us to exclude it from the results:

It also agreed that our legacy tourism post was human-generated:

As expected, Copyleaks found the AI text easily:

Turnitin

We were unable to test this tool as there is no self-serve option for account creation.

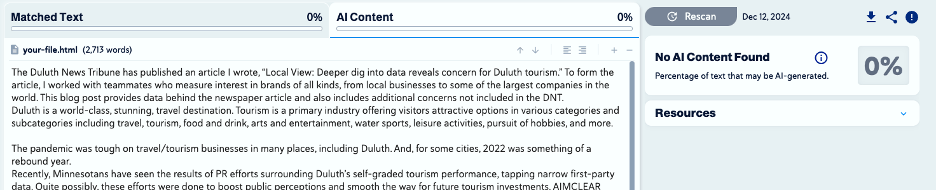

ZeroGPT

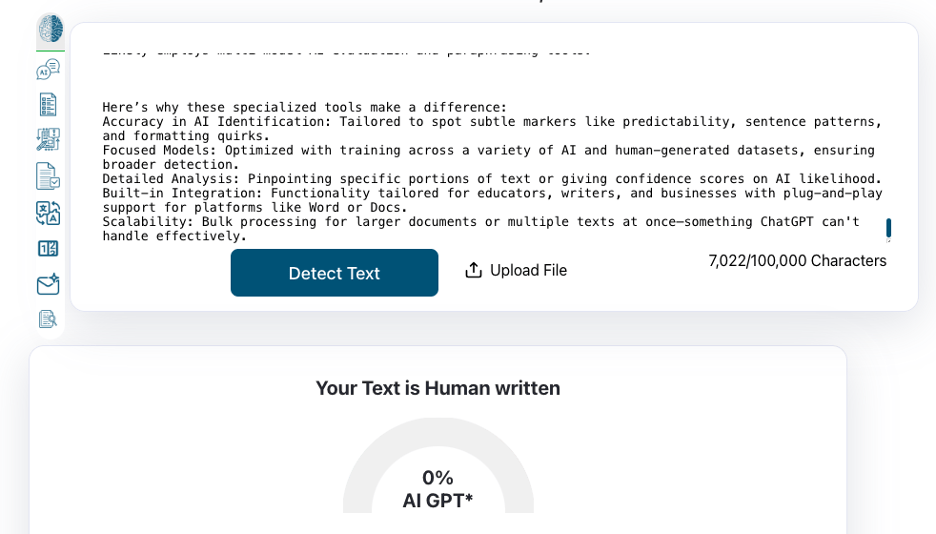

We were able to fool ZeroGPT completely with our partially AI-written post; it found no indicators of AI generation:

It is worth noting that to use the plagiarism detector you must click into a separate area and run the tool again, and even then it would not work for us.

The original blog post also came back as 0% AI GPT and the full-blown generated piece squeaked by with minor suspicion:

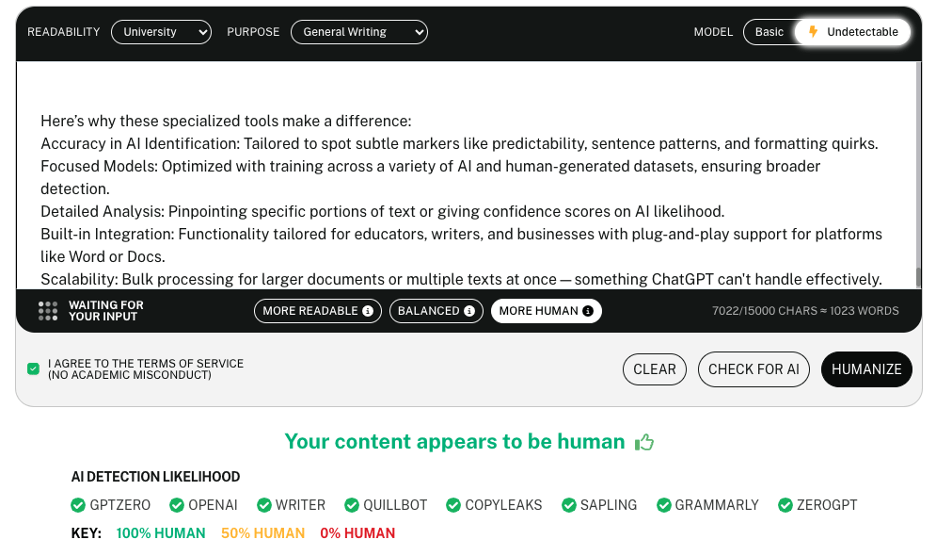

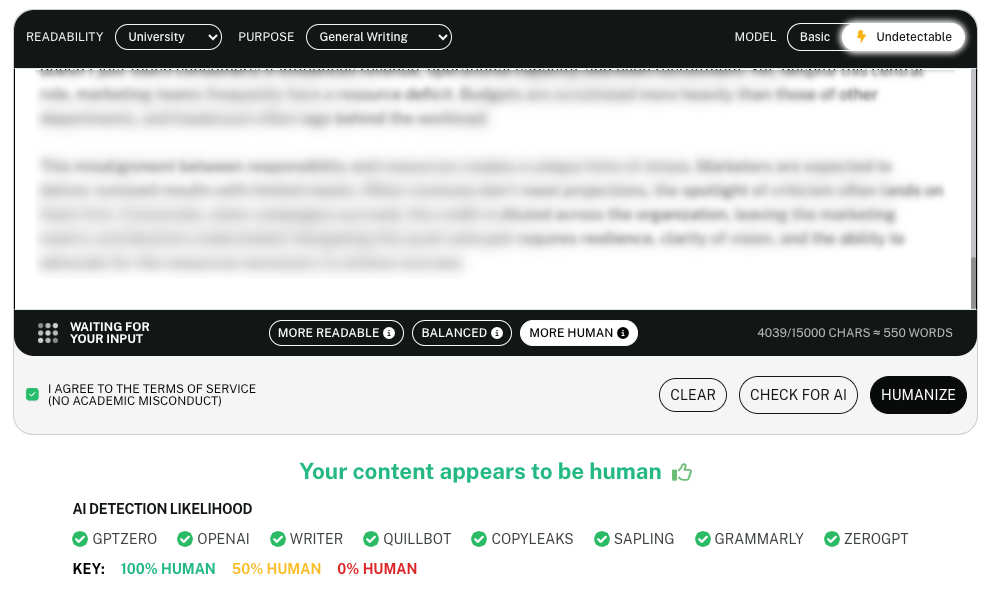

Undetectable AI

The final tool was undoubtedly the worst to work with and also the least accurate. Instead of giving its own opinion on the likelihood of content being written by AI, it tells you what it thinks other tools will think. Our 50/50 post garnered a pass, and the tool incorrectly told us that Copyleaks would consider it likely human (despite Copyleaks actually being certain of the opposite).

Although the text input states a 15,000-character limit, the tool was unable to process the legacy blog post (which was under that limit). We removed paragraphs one by one, and it succeeded only after we had trimmed nearly a third of the post:

Finally, our fully generated piece of text, which every other tool had caught, evaded detection:

Clearly, tech providers are in a rush to get AI detection tools to market quickly, and we can only expect tools will continue to become more sophisticated – right along with smart content creators who will become more sophisticated at using AI as a tool in their content toolbox. We’re in an algorithm-fueled game of “Cat & Mouse.”