TL;DR:

- Most people who use commercial AI models through web UIs never touch the real control surface: the behind-the-scenes parameters that actually shape output (Temperature, Top-P, Top-K, Max Tokens, Frequency Penalty, Presence Penalty).

- The web UIs hide these behind presets, which is why API outputs often feel “wrong” until you understand what those presets were doing for you.

- Model gardens (OpenRouter, Vertex AI Model Garden, Replicate) give you full parameter control without writing code, letting you work the same dials developers use.

- This post details the meaning of each parameter, shows how they affect each other in combination, and explains their interaction modes (tandem, parallel, override, conflict) so you can tune with intention instead of guessing.

- You’ll also learn how to avoid traps like low-temp dead zones, penalty collisions, and runaway token costs.

- Towards the end, you’ll find a full set of proven parameter recipes for technical writing, creative fiction, chatbots, data analysis, brainstorming, and marketing copy—ready to plug in and use.

- Text parameters don’t translate directly to images or video — visual models use entirely different controls (CFG Scale, diffusion steps, samplers, motion, FPS, duration), and this guide provides only a zoomed-out overview of those differences. The detailed sampling logic here applies to text generation only.

- Save this article as a PDF and ask the AI model of your choice questions about it. Working from the examples in this post, it can suggest starting-point settings for a wide range of real-world use cases.

Background and Premise Behind Sampling Parameters

Every time you select “Creative” or “Precise” in ChatGPT, Claude, or Gemini, you’re choosing from a curated menu. Behind that menu sits a “control room” with nine or more parameters that determine everything about your output: originality, length, vocabulary range, and tendency to repeat itself. The consumer web UI is training wheels. The API is the actual vehicle.

This matters because the gap between “pretty good” and “exactly what I needed” often comes down to a single parameter adjustment. I’ve watched teams spend weeks prompt-engineering their way around a problem that disappears with a 0.3 temperature reduction. I’ve seen marketing departments abandon API integration entirely because their outputs “felt wrong” compared to the web UI, not realizing they were running at default settings while the web UI was applying invisible presets tuned over months of user testing.

Here’s what trips up even experienced practitioners: when you move from web UI to API, those presets vanish. You’re suddenly flying manual, and the outputs feel wrong. The model is not failing. You simply don’t know which settings the web UI was choosing for you, and they are likely different from the API defaults.

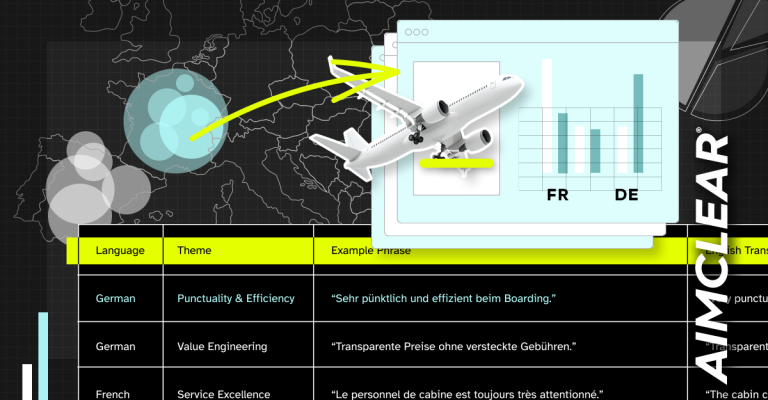

The Bridge: Model Gardens

Before we dive into parameters, let’s address the elephant in the room. Not everyone wants to write code. Not everyone should have to.

Model gardens, platforms like OpenRouter, Vertex AI Model Garden, or Replicate, sit between consumer UIs and raw API access. They give users advanced parameter controls without requiring authentication setup, API key management, or a single line of Python. You get dropdown menus and sliders for the same settings developers configure in code.

Think of model gardens as the mixing board in a recording studio. You don’t need to understand signal processing to move faders. But you do need to know what each fader controls.

The practical difference: In ChatGPT’s web interface you might select “GPT-5” and choose a personality mode. In OpenRouter, you select “GPT-5” and then see sliders for Temperature, Top-P, Top-K, Frequency Penalty, Presence Penalty, Max Tokens, and more. Same model. Radically different control surface.

Example of the gap:

Web UI approach: You select “Creative” mode, type “Write a product description for noise-canceling headphones,” and get what you get.

Model garden approach: You set Temperature to 0.85, Top-P to 0.9, Frequency Penalty to 0.6, type the same prompt, and get output tuned for marketing copy that won’t repeat “immersive sound experience” four times in three sentences.

The output quality difference isn’t subtle. Once you’ve experienced granular control, consumer interfaces start feeling like coloring with crayons when oils and fine inks are available.

Part One: Core Parameters

Temperature (0.0 – 2.0): The Creativity Controller

Temperature is the foundational parameter. It adjusts the probability distribution across the model’s entire vocabulary before any other filtering happens. At the mathematical level, it reshapes the “logits,” the raw scores assigned to every possible next word.

Mechanically, the model generates a score for every token in its vocabulary (often 50,000+ options). Temperature divides these scores before they are converted to probabilities. Dividing by a value below 1 widens the gaps between scores, so the top candidates pull further ahead; dividing by a value above 1 compresses the gaps. In other words, temperature controls how boldly or cautiously the model picks its next word. At low temperature, the model strongly favors the most obvious, predictable choices. At high temperature, it treats more unusual words as almost equally viable, giving them a real chance to appear.
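To make the divide-then-convert step concrete, here is a minimal sketch of temperature scaling followed by softmax. The four logit values are made up for illustration; real models produce one score per vocabulary token, and providers may handle T=0 as a special greedy case rather than a division.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Divide raw scores by the temperature, then convert them to probabilities."""
    if temperature <= 0:
        # T=0 cannot be divided by; it is usually treated as "always pick the top token"
        raise ValueError("temperature must be > 0")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(score - peak) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]

# Hypothetical logits for four candidate tokens
logits = [2.0, 1.0, 0.5, 0.0]
for temperature in (0.2, 1.0, 1.5):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

Running it shows the top token's share shrinking as temperature rises, which is the sharpening and flattening effect the zones below describe.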

Low temperature (0.0–0.2): The Deterministic Zone

The distribution sharpens dramatically. High-probability tokens dominate even more aggressively. At T=0.0 the model essentially always picks its top choice, so output becomes effectively deterministic: same prompt, same response, nearly every time. At 0.1–0.2, small variations can still appear.

When to use it: Legal documents, medical data extraction, financial reporting, any situation where deviation is failure. You want the model’s single most confident answer, period.

Example at T=0.0:

Prompt: “Write one sentence about the future of AI.”

Output: “The future of AI involves continued advancement in machine learning and automation technologies.”

Notice the complete absence of flair. This is Wikipedia prose, accurate, conventional, forgettable. That’s exactly what you want when extracting contract terms or summarizing lab results.

Medium temperature (0.5–0.7): The Professional Zone

You’ll get balanced output, professional but not robotic. The model will occasionally select its second or third choice, introducing mild variation without risking coherence. This is the default sweet spot for most business applications.

When to use it: Business communications, documentation, support responses, anything that should sound competent and human without calling attention to itself.

Example at T=0.5:

Prompt: “Write one sentence about the future of AI.”

Output: “The future of AI promises transformative changes across industries as systems become increasingly capable of complex reasoning.”

Output is still grounded, but there’s a point of view emerging. “Promises transformative changes” carries more energy than “involves continued advancement.” The model took a small risk and it paid off.

High temperature (0.9–1.2): The Creative Zone

The distribution flattens significantly. Lower-probability tokens get a real fighting chance. Language becomes more varied, unexpected, occasionally brilliant. This is where creative writing, brainstorming, and marketing copy should live.

When to use it: Ad copy, creative briefs, fiction, ideation sessions, anywhere you want the model to surprise you with phrasing you wouldn’t have written yourself.

Example at T=1.0:

Prompt: “Write one sentence about the future of AI.”

Output: “The future of AI dances between silicon dreams and digital consciousness, reshaping how humanity defines intelligence itself.”

Now we’re somewhere interesting. “Dances between silicon dreams” isn’t a phrase the model would likely produce at T=0.2. The flattened distribution let it reach for something evocative. This is unusable for a technical whitepaper and perfect for a keynote opening.

Experimental (1.3+): The Edge Zone

Coherence starts slipping. The model may lose its thread mid-sentence or produce jarring word combinations. Artistic potential is high; reliability is low.

When to use it: Poetry, experimental fiction, situations where you’ll heavily edit anyway and want raw creative material to sculpt.

Example at T=1.5:

Prompt: “Write one sentence about the future of AI.”

Output: “AI’s future spirals through quantum possibilities birthing synthetic cognition in digital gardens where logic blooms sideways.”

“Logic blooms sideways” is either brilliant or nonsense depending on your use case. At T=1.5+, you’re generating raw material for human curation, not finished output.

The Use-Case Mapping

The infographic breaks this down by application domain: 0.0–0.2 for legal, medical, and data work. 0.3–0.5 for support docs and FAQs. 0.6–0.8 for blogs, emails, and reports. 0.9–1.2 for creative marketing and ideation. 1.3–2.0 for fiction, poetry, and artistic exploration. Match your temperature to your tolerance for surprise.

Max Tokens (1 – 128,000): The Length Governor and Budget Control

Max Tokens is your hard ceiling on output length. The model stops when it hits this limit or when it naturally completes its thought, whichever comes first. This seems simple until you realize it’s also your primary cost control mechanism: Max Tokens governs how long the model is allowed to talk, how much a single response can cost you, and how predictable your system’s behavior is. Set it too low and the model chops sentences mid-thought. Set it too high and you invite runaway verbosity that quietly inflates your API bill. The goal is enough runway for the model to finish naturally without unnecessary length.

Model-specific limits:

Different models have different ceilings. GPT-4 Turbo handles 4K output within 128K context. Claude 3 Opus manages 4K output within 200K context. Gemini 1.5 Pro pushes 8K output within 1M context. Context equals input plus output, so your prompt length constrains your available output space.

Example of strategic Max Token setting:

Scenario: You need product descriptions around 150 words.

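Naive approach: Set Max Tokens to 150. Problem: tokens are not words. English text averages roughly 1.3 tokens per word, so 150 tokens covers only about 110 words, and the model hits the ceiling mid-sentence, producing “These headphones deliver exceptional sound quality with advanced noise-canceling technology that…”

Smart approach: Set Max Tokens to 250. A 150-word description runs roughly 200 tokens, so the model completes naturally and still has runway to finish its thoughts. You pay for the tokens actually generated, not the 250-token ceiling. The ceiling is insurance, not a target.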

Pro tip: Set Max Tokens higher than your target length. The model stops naturally when it’s done and you only pay for actual generation, not your ceiling. Monitor usage patterns to find the sweet spot between adequate runway and cost efficiency.
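If you want a quick way to turn a target word count into a ceiling, a rough estimate like the one below can help. The 1.33 tokens-per-word ratio and the 25% headroom are assumptions for typical English prose, not constants of any model, and `max_tokens_for_words` is a hypothetical helper name.

```python
import math

def max_tokens_for_words(target_words, tokens_per_word=1.33, headroom=1.25):
    """Estimate a Max Tokens ceiling for a target word count.

    tokens_per_word and headroom are rough assumptions; measure your own
    outputs and adjust them.
    """
    expected_tokens = target_words * tokens_per_word
    return math.ceil(expected_tokens * headroom)

# Ceiling for a product description of about 150 words
print(max_tokens_for_words(150))
```

Treat the result as a starting point, then tighten or loosen the headroom once you have real usage data.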

Top-P / Nucleus Sampling (0.0 – 1.0): Dynamic Vocabulary Selection

Top-P, also called nucleus sampling, works by cumulative probability. Set P=0.9, and the model considers only the minimum number of tokens whose combined probability mass reaches 90%. Everything below that threshold gets excluded from consideration before sampling. This is important because Top-P automatically adapts to how certain or uncertain the model is at each step, tightening the vocabulary when the model knows what it wants to say and widening it when multiple reasonable options exist. That means it preserves coherence in predictable contexts while still allowing creativity and variety where the model has genuine flexibility, giving you far more stable and context-aware control than temperature alone can provide.

Top-P is more adaptive than temperature alone. When the model is highly confident about the next word, it might only consider three tokens to hit 90%. When it’s genuinely uncertain, it might need fifty tokens to reach the same threshold. The pool size adjusts dynamically at each generation step.

Visual demonstration of the mechanism:

Imagine the model is generating a word and has assigned these probabilities:

| Token | Probability | Cumulative |
| --- | --- | --- |
| blue | 40% | 40% |
| azure | 30% | 70% |
| cyan | 15% | 85% |
| teal | 8% | 93% |
| navy | 4% | 97% |
| other | 3% | 100% |

At Top-P=0.9: The model includes “blue” (40%), “azure” (70%), “cyan” (85%)—still under 90%. It adds “teal” to hit 93%, which crosses the threshold. Sampling occurs only among these four tokens. “Navy” and everything else get excluded entirely.

At Top-P=0.5: Only “blue” and “azure” make the cut. The model chooses between just two options, producing more focused output.

At Top-P=1.0: Everything stays in the pool. Top-P has no filtering effect.
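The walkthrough above can be sketched in a few lines of Python. This is an illustrative filter over the example probabilities, not any provider's actual implementation; `top_p_filter` is a name chosen here, and “other” stands in for the whole remaining tail of tokens.

```python
def top_p_filter(token_probs, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    ranked = sorted(token_probs.items(), key=lambda item: item[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append(token)
        cumulative += prob
        # Small tolerance so floating-point rounding does not add an extra token
        if cumulative >= p - 1e-9:
            break
    return kept

# Probabilities from the table above
probs = {"blue": 0.40, "azure": 0.30, "cyan": 0.15,
         "teal": 0.08, "navy": 0.04, "other": 0.03}

print(top_p_filter(probs, 0.9))
print(top_p_filter(probs, 0.5))
print(top_p_filter(probs, 1.0))
```

The pool size is not fixed: feed in a more confident distribution and the same P value keeps fewer tokens.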

Settings guidance and their effects:

P=0.1 (top 10% of probability mass): Extremely narrow. Almost deterministic, because a single likely token often covers 10% on its own. Use when you want temperature-like sharpening but prefer this mechanism.

P=0.5 (Selective): Focused vocabulary. Good for technical writing where you want precision without going fully deterministic.

P=0.9 (Balanced): The recommended default. Maintains variety while excluding low-probability noise.

P=1.0 (Everything): Top-P disabled. All tokens remain eligible. Use when you want temperature to be your only creativity control.

Example of Top-P impact:

Prompt: “The scientist discovered a _____”

At P=0.5: Likely completions: “breakthrough,” “solution,” “method,” “pattern” The model sticks to high-probability, conventional choices.

At P=0.95: Likely completions: “breakthrough,” “solution,” “method,” “pattern,” “paradox,” “anomaly,” “correlation,” “discrepancy” Lower-probability but still reasonable tokens enter the pool, enabling more varied output.

The key insight: Top-P adapts to context in ways temperature cannot. When the model knows what comes next, Top-P automatically narrows options. When genuine ambiguity exists, Top-P automatically expands them.

Top-K: The Hard Vocabulary Limit

Where Top-P is dynamic and probability-based, Top-K is absolute and count-based. Set K=50, and only the 50 highest-probability tokens get considered, regardless of their cumulative probability. It’s a blunt instrument compared to Top-P, but sometimes blunt is what you need. This matters because Top-K gives you a hard, predictable safety ceiling: no matter how chaotic or creative your settings are, the model can only choose from a fixed number of top candidates. That makes it useful when you need to prevent the system from wandering into extremely low-probability, off-the-wall outputs, even if your temperature or other settings would otherwise allow it.

Important caveat: OpenAI typically does not expose Top-K in its standard public API endpoints (favoring Top-P), though it may be available in specialized services or custom deployments. However, Claude, Gemini, LLaMA, and most open-source models offer both.

How K=5 works in practice:

| Rank | Token | Status |
| --- | --- | --- |
| 1 | blue | INCLUDED |
| 2 | azure | INCLUDED |
| 3 | cyan | INCLUDED |
| 4 | teal | INCLUDED |
| 5 | navy | INCLUDED |
| | (cutoff at K=5) | |
| 6 | cobalt | EXCLUDED |
| 7 | sapphire | EXCLUDED |
| 8+ | … | EXCLUDED |

No probability math. Just a hard count cutoff.
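As a sketch, the count cutoff looks like this. The probabilities are invented for illustration (the table above only gives ranks), and renormalizing the survivors so they sum to 1 is a common choice that I am assuming here rather than something every implementation is guaranteed to do.

```python
def top_k_filter(token_probs, k):
    """Keep the k highest-probability tokens and renormalize them to sum to 1."""
    ranked = sorted(token_probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(prob for _, prob in ranked)
    return {token: prob / total for token, prob in ranked}

# Hypothetical probabilities for the seven ranked tokens
probs = {"blue": 0.35, "azure": 0.25, "cyan": 0.15, "teal": 0.10,
         "navy": 0.06, "cobalt": 0.05, "sapphire": 0.04}

survivors = top_k_filter(probs, 5)
print(list(survivors))
```

Notice that the function never looks at how much probability the excluded tokens held. That is the difference from Top-P.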

Typical settings:

K=10: Very limited. Forces conservative vocabulary. Good for constrained generation tasks.

K=40: Natural range. Allows variety without chaos.

K=100+: Wide open. Top-K provides minimal filtering at this level.

Example of Top-K as safety net:

Scenario: You’re running a brainstorming session at T=1.2. High temperature means flattened probabilities—lots of weird tokens become viable. Without constraints, you might get output like: “The marketing strategy should leverage synergistic quantum paradigm flux capacitors.”

Solution: Set K=50. Even with high temperature, the model can only pick from its top 50 token choices at each step. This provides a safety ceiling while preserving the creative benefits of high temperature.

Output with T=1.2, K=50: “The marketing strategy should leverage unexpected channel combinations, treating customer attention as a renewable resource rather than extractable commodity.”

Still creative. Not unhinged. Top-K caught the flux capacitors before they escaped.

Repetition Penalties: The Variety Enforcers

AI models have a natural tendency to repeat themselves. The same words, phrases, and sentence structures recur because they’re statistically safe choices. Repetition penalties exist to counteract this gravitational pull toward the familiar. There are three flavors, and different providers implement different ones:

Frequency Penalty (OpenAI, 0–2):

Penalizes tokens proportionally to how many times they’ve already appeared in the output. The first instance of “innovative” passes freely. The second gets a small penalty. The third gets a larger one. The penalty scales with count.

When to use it: Technical content, documentation, anywhere you need variety without forcing unnatural vocabulary shifts. A frequency penalty of 0.3–0.5 gently discourages jargon loops.

Example of Frequency Penalty effect:

Prompt: “Explain the benefits of our product in three sentences.”

FreqPen=0.0: “Our product delivers innovative solutions for modern challenges. The innovative technology behind our product enables innovative approaches to complex problems. Our innovative team continues to innovate.”

FreqPen=0.5: “Our product delivers innovative solutions for modern challenges. The advanced technology behind it enables novel approaches to complex problems. Our dedicated team continues pushing boundaries.”

The repeated “innovative” got progressively penalized until the model sought alternatives.

Presence Penalty (OpenAI, 0–2):

Binary rather than proportional. Penalizes a token simply for existing anywhere in the output, regardless of how many times it appeared. One instance triggers the full penalty.

When to use it: When you want aggressive vocabulary diversity. Presence penalty forces the model to constantly seek fresh words. Higher risk of unnatural phrasing, but maximum variety.

Example of Presence Penalty effect:

PresPen=0.0: “The cat was very very very happy.”

PresPen=0.8: “The cat was extremely happy.” (Second “very” blocked immediately)

PresPen=1.5: “The feline seemed remarkably euphoric.” (Even “cat” and “happy” got pushed out)

At high levels, Presence Penalty can make output read strangely—the model is forced into semantic neighbors that don’t quite fit. Use with caution.

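Repetition Penalty (open-source stacks and some model gardens, 0–2):

A single unified parameter that stands in for the separate frequency and presence controls. Simpler to configure, less granular control. It is typically multiplicative rather than additive, with 1.0 meaning no penalty, so its operating range differs from OpenAI’s split parameters. Check your provider’s documentation before assuming it is available: at the time of writing, Anthropic’s Claude API does not expose a penalty parameter, and Gemini’s API offers separate frequency and presence penalties on the models that support them.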
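One widely used form of this unified penalty, the one I understand open-source toolkits such as Hugging Face Transformers to follow, is multiplicative: a value of 1.0 does nothing, and values above 1.0 lower the score of any token that has already appeared. The sketch below is a simplified illustration with made-up logits, not a copy of any library's code.

```python
def apply_repetition_penalty(logits, generated_tokens, penalty=1.0):
    """Lower the score of every token that has already been generated."""
    seen = set(generated_tokens)
    adjusted = {}
    for token, score in logits.items():
        if token in seen:
            # With penalty > 1, dividing a positive score or multiplying a
            # negative one both push the score down
            score = score / penalty if score > 0 else score * penalty
        adjusted[token] = score
    return adjusted

# Hypothetical logits; "very" and "happy" have already been generated
logits = {"very": 2.0, "happy": -1.0, "extremely": 1.5}
print(apply_repetition_penalty(logits, ["very", "happy"], penalty=1.25))
```

Because the adjustment does not grow with the number of repeats, it behaves more like a presence penalty than a frequency penalty.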

Effect demonstration across the spectrum:

| Setting | Input Text | Output Transformation |
| --- | --- | --- |
| Penalties OFF | “The cat was very very very happy” | Natural repetition allowed |
| Penalties MEDIUM | Same input | “The feline was remarkably joyful” |
| Penalties HIGH | Same input | “That feline seemed remarkably euphoric” |

The medium setting shows clear vocabulary diversity without distortion, although “cat” has already become “feline.” The high setting makes the shift more noticeable: “joyful” became “euphoric,” “was” became “seemed,” and the sentence opening changed. Whether this is better depends entirely on context.

The stacking trap:

Frequency Penalty and Presence Penalty stack additively. Running both at 0.5 creates a combined penalty stronger than either alone. Running both at 1.0 can produce aggressively strange output as the model desperately avoids any repeated vocabulary.
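The additive stacking is easier to see in code. This sketch follows the adjustment as I understand OpenAI's documentation to describe it: subtract the frequency penalty once per prior occurrence, and subtract the presence penalty once if the token has appeared at all. The logits and history are invented for illustration.

```python
from collections import Counter

def apply_penalties(logits, generated_tokens, frequency_penalty=0.0, presence_penalty=0.0):
    """Subtract frequency and presence penalties from the raw token scores."""
    counts = Counter(generated_tokens)
    adjusted = {}
    for token, score in logits.items():
        count = counts.get(token, 0)
        # Frequency scales with the count; presence is a one-time flat deduction
        presence = presence_penalty if count > 0 else 0.0
        adjusted[token] = score - count * frequency_penalty - presence
    return adjusted

# Hypothetical logits; "innovative" has already been used twice
logits = {"innovative": 3.0, "advanced": 2.5, "novel": 2.0}
history = ["innovative", "innovative"]
print(apply_penalties(logits, history, frequency_penalty=0.5, presence_penalty=0.5))
```

With both penalties at 0.5, the twice-used word loses 1.5 points and drops below both alternatives, which is exactly the pressure that turns strange at higher settings.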

Example of stacked penalties gone wrong:

FreqPen=1.2, PresPen=1.2: “Our enterprise solution provides unprecedented capability augmentation through synergistic methodology implementation across organizational hierarchies.”

The penalties pushed the model into corporate buzzword territory because all the natural words were blocked. Sometimes less is more.

Part Two: The Processing Pipeline: Order Matters

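This is where most practitioners go wrong. These parameters don’t operate independently or simultaneously. They execute in sequence, each constraining what the next one sees. Understanding this pipeline explains why certain combinations behave unexpectedly. Treat the order below as a working mental model: the exact ordering varies by implementation, and some inference stacks apply penalties to the raw scores before temperature and filtering.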

The five-step sequence:

- Temperature reshapes the entire probability distribution (logit scaling)

- Top-K applies a hard count ceiling (eliminates all but top K tokens)

- Top-P filters from what remains based on probability mass (eliminates until cumulative P reached)

- Penalties adjust scores on the surviving candidates (apply frequency/presence adjustments)

- Sample selects the final token from the adjusted, filtered pool
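The five steps above can be sketched as a single function. This follows the ordering described in this post; real inference stacks may order the steps differently, and the function name, the logits, and the choice to renormalize after each cut are all assumptions made for illustration.

```python
import math
import random
from collections import Counter

def softmax(scores):
    """Convert a {token: score} mapping into probabilities."""
    peak = max(scores.values())
    exps = {token: math.exp(score - peak) for token, score in scores.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}

def sample_next_token(logits, history, temperature=1.0, top_k=None, top_p=1.0,
                      frequency_penalty=0.0, presence_penalty=0.0, rng=random):
    # Step 1: temperature scales every score (temperature must be > 0 here)
    scores = {token: score / temperature for token, score in logits.items()}
    # Step 2: Top-K keeps only the k best-scoring tokens
    ranked = sorted(scores, key=scores.get, reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    # Step 3: Top-P keeps the smallest prefix whose cumulative probability reaches top_p
    probs = softmax({token: scores[token] for token in ranked})
    pool, cumulative = [], 0.0
    for token in ranked:
        pool.append(token)
        cumulative += probs[token]
        if cumulative >= top_p:
            break
    # Step 4: penalties lower the scores of survivors that already appeared
    counts = Counter(history)
    adjusted = {
        token: scores[token]
        - counts[token] * frequency_penalty
        - (presence_penalty if counts[token] else 0.0)
        for token in pool
    }
    # Step 5: sample from the renormalized pool
    final = softmax(adjusted)
    return rng.choices(list(final), weights=list(final.values()), k=1)[0]

# Hypothetical logits for five candidate tokens
logits = {"blue": 2.0, "azure": 1.7, "cyan": 1.0, "teal": 0.4, "navy": -0.3}
rng = random.Random(0)  # seeded so repeated runs are comparable
print(sample_next_token(logits, history=["blue"], temperature=0.9, top_k=4,
                        top_p=0.9, frequency_penalty=0.5, presence_penalty=0.5, rng=rng))
```

Try calling it with `top_k=1` or a very small `top_p`: every later step then operates on a single token, which previews the override behavior covered later.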

Why sequence matters—an example:

Settings: Temperature=1.5, Top-K=100, Top-P=0.9

Step 1: Temperature flattens the distribution. (At exactly T=1.0 the distribution would pass through unchanged.) At T=1.5, what was 40%/30%/15%/10%/5% becomes roughly 33%/28%/17%/13%/8%.

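Step 2: Top-K=100 looks at this flattened distribution and keeps the top 100 tokens. Because flattening spreads probability into the tail, these 100 tokens cover less of the total probability mass than they would at low temperature, so the cut removes more of what the model might otherwise have sampled.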

Step 3: Top-P=0.9 filters the remaining 100 tokens down to those covering 90% cumulative probability. Because temperature flattened things, this might still be 60+ tokens instead of the 4-5 you’d see at low temperature.

Step 4: Penalties adjust scores. A high-frequency token that survived all filtering might now get pushed down.

Step 5: Sampling happens across the final pool.

If you set Temperature=0.0, the distribution is so sharp that Step 1 essentially determines everything. Top-K and Top-P are cutting from a pool where one token already has 95%+ probability. Penalties can’t push the model away from a token that dominates that completely. Understanding the pipeline reveals why low temperature makes other parameters nearly irrelevant.

The Interaction Matrix: How Parameters Combine

Parameters don’t just execute in sequence—they create emergent behaviors when combined. The interaction patterns fall into four categories: Tandem, Parallel, Override, and Conflict. Mastering these patterns is what separates operators from architects.

Tandem Relationships (They Work Together Constructively)

Tandem parameters have complementary functions that combine productively. One creates an effect; the other contains it.

Temperature + Top-K: Controlled Creativity

High temperature flattens the probability distribution, making unusual tokens viable. Top-K provides an absolute safety ceiling, ensuring the model can’t wander into complete incoherence.

Example configuration: T=0.9, K=50

What happens: Temperature makes tokens ranked 30-50 much more competitive than they’d normally be. But token 51 and beyond can’t participate regardless. You get creative vocabulary without risking the model grabbing something from rank 500.

Prompt: “Describe a sunset”

T=0.9, K=off: “The sunset painted the sky in magnificent amalgamations of prismatic luminescence, each photon cascading through atmospheric particulate matter…” (Temperature let the model grab “prismatic luminescence” from deep in the probability rankings)

T=0.9, K=50: “The sunset painted the sky in ribbons of amber and rose, the colors bleeding together like watercolors left in the rain.” (Still creative, but grounded. Top-K kept the vocabulary within a reasonable sphere)

Frequency + Presence Penalty: Additive Variety Enforcement

These penalties stack, creating escalating pressure toward vocabulary diversity.

Example configuration: FreqPen=0.5, PresPen=0.5

What happens: Under OpenAI’s published formula, a word that has appeared once gets Presence (-0.5) plus Frequency (-0.5 × 1 instance = -0.5), for a combined -1.0 to its score. A word that has appeared twice gets Presence (-0.5) plus Frequency (-0.5 × 2 instances = -1.0), for a combined -1.5. The more a word repeats, the harder the model resists using it again.

Prompt: “Write three sentences about customer satisfaction.”

Penalties=0: “Customer satisfaction drives business growth. Satisfied customers become loyal customers. Customer satisfaction should be every company’s priority.” (Two “customer satisfaction” instances, two “customers”)

FreqPen=0.5, PresPen=0.5: “Customer satisfaction drives business growth. Happy clients become loyal advocates. Prioritizing positive experiences should guide every company’s strategy.” (Penalties forced the model to find alternatives after the first sentence)

Warning: At high levels (both at 1.0+), stacked penalties can force vocabulary so aggressively that output becomes stilted or semantically imprecise. Monitor quality when pushing these.

Parallel Relationships (Same Goal, Different Mechanisms)

Parallel parameters pursue similar outcomes through different means. Used together, they compound unpredictably.

Temperature + Top-P: Dual Randomness Controls

Both parameters increase output variety, but through different mechanisms. Temperature reshapes the distribution; Top-P filters it. When you adjust both, effects multiply in ways that are difficult to predict.

OpenAI’s official guidance: Adjust one OR the other, not both simultaneously. Keep one at default when experimenting with the other.

Example of compounding problems:

T=1.2, P=0.95: High temperature flattens the distribution, making many tokens similarly probable. High Top-P keeps almost all of them in the pool. The model is now sampling from a huge, flat distribution—output becomes erratic.

Prompt: “The business strategy should focus on…”

T=1.2, P=0.95: “The business strategy should focus on leveraging quantum synergies across dimensional stakeholder matrices while implementing bio-dynamic paradigm shifts…”

T=1.2, P=0.7: “The business strategy should focus on unexpected partnership models, treating each customer segment as a distinct ecosystem with unique growth dynamics…”

Same temperature, but lower Top-P filters the flattened distribution down to the top 70% probability mass—still creative, but grounded.
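To see how the two controls compound, you can count how many tokens survive the Top-P cut at different temperatures. The sketch below uses an invented, smoothly decaying set of 1,000 logits. Real distributions are lumpier, so treat the counts as directional only.

```python
import math

def nucleus_size(logits, temperature, top_p):
    """Count how many tokens Top-P keeps after temperature scaling."""
    scaled = [score / temperature for score in logits]
    peak = max(scaled)
    exps = sorted((math.exp(score - peak) for score in scaled), reverse=True)
    total = sum(exps)
    cumulative = 0.0
    for size, value in enumerate(exps, start=1):
        cumulative += value / total
        if cumulative >= top_p:
            return size
    return len(exps)

# Hypothetical long-tailed logits: each rank scores slightly lower than the last
logits = [-0.05 * rank for rank in range(1000)]

for temperature, top_p in [(0.7, 0.9), (1.2, 0.7), (1.2, 0.95)]:
    print(temperature, top_p, nucleus_size(logits, temperature, top_p))
```

On this toy distribution, raising both settings together grows the pool far more than raising either one alone, which is why changing one lever at a time is the safer habit.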

Best practice: Pick your primary creativity control. If you’re using Temperature as your main lever, keep Top-P at 0.9 or 1.0. If you prefer Top-P for creativity control, keep Temperature at 0.7-1.0.

Override Relationships (One Cancels the Other)

Override happens when two parameters target the same filtering stage, and the more restrictive one completely dominates.

Top-P + Top-K: The Restrictive Wins

These filters operate in sequence (K first, then P), and whichever is more restrictive determines the outcome. The other parameter may be doing literally nothing.

Example 1: K overrides P

Settings: K=20, P=0.9

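What happens: Top-K=20 first cuts the pool to exactly 20 tokens. Top-P=0.9 then asks “which of these 20 tokens sum to 90% probability?” When the model is uncertain and the top 20 tokens together hold less than 90% of the original probability mass, Top-P has nothing left to cut. K=20 is doing all the work. P=0.9 is decorative. (Implementations that renormalize probabilities after the Top-K cut behave differently: there, Top-P can still trim the tail of the 20.)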

Example 2: P overrides K

Settings: K=100, P=0.5

What happens: Top-K=100 keeps 100 tokens. Top-P=0.5 then cuts to the minimum set summing to 50% probability—typically 3-10 tokens depending on confidence. K=100 is essentially irrelevant; P=0.5 does all the filtering.

How to diagnose override:

Run the same prompt several times under each configuration, with a fixed seed where your platform supports one: once with just Top-K, once with both Top-K and Top-P. If the outputs are indistinguishable, Top-K is overriding. Flip the test: run with just Top-P, then both. If outputs match, Top-P is overriding.
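If you have access to next-token probabilities (some platforms expose them as logprobs), you can also check the override directly instead of comparing outputs. This sketch assumes the probabilities are already sorted from highest to lowest and that Top-P is measured against the original distribution without renormalizing after Top-K. `binding_filter` and the toy distribution are hypothetical.

```python
def binding_filter(sorted_probs, top_k, top_p):
    """Report which filter actually limits the pool, and the resulting pool size."""
    k_size = min(top_k, len(sorted_probs))
    p_size, cumulative = len(sorted_probs), 0.0
    for size, prob in enumerate(sorted_probs, start=1):
        cumulative += prob
        if cumulative >= top_p:
            p_size = size
            break
    if k_size < p_size:
        return "top_k", k_size
    if p_size < k_size:
        return "top_p", p_size
    return "tie", k_size

# Hypothetical distribution over 500 tokens, each 3% less likely than the previous one
weights = [0.97 ** rank for rank in range(500)]
total = sum(weights)
probs = [weight / total for weight in weights]

print(binding_filter(probs, top_k=20, top_p=0.9))
print(binding_filter(probs, top_k=100, top_p=0.5))
```

The answer changes from token to token as the model's confidence shifts, so check several positions before concluding that one filter is always the decorative one.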

Best practice: Use one or the other. Pick Top-P for dynamic, probability-aware filtering. Pick Top-K for absolute ceiling guarantees. Don’t set both unless you’ve specifically verified which is actually active in your configuration.

Conflict Relationships (They Fight Each Other)

Conflict occurs when parameters work toward opposing goals, creating tension that degrades output quality.

Low Temperature + High Penalties: The Awkward Vocabulary Trap

Low temperature wants safe, high-probability tokens. High penalties block those exact tokens. The model gets trapped, forced into awkward vocabulary that technically satisfies both constraints but sounds wrong.

Example:

Settings: T=0.2, FreqPen=1.2, PresPen=1.0

Prompt: “Explain how a car engine works in simple terms.”

Expected from T=0.2: Clear, conventional, technical accuracy. The word “engine” appearing multiple times because it’s the subject.

What actually happens: “The engine converts fuel energy into mechanical motion. The engine relies on controlled combustion. The engine requires regular maintenance…”

The model keeps saying “engine” because T=0.2 concentrates the probability so intensely (e.g., 98% on one token) that the penalty adjustments are too weak to change the winner. The penalty fails to drop the token’s score below the next-best option, so the word repeats anyway. When the penalty does win, you get the awkward-vocabulary problem described above instead.

The conflict: Temperature says “stick to safe choices.” Penalties say “never repeat anything.” These goals are mutually exclusive when your subject requires repeated references.

Solution: Low temperature + low or zero penalties. Or high temperature + moderate penalties. Pick a lane.

High Temperature + High Penalties: Forced Incoherence

Extreme settings on both ends can create output that’s aggressively novel but semantically broken.

Settings: T=1.3, FreqPen=1.5, PresPen=1.2

What happens: Temperature makes rare tokens viable. Penalties aggressively block any repeated vocabulary. The model cycles through increasingly obscure synonyms while maintaining high randomness.

Output example: “The corporation manifested unprecedented paradigm orchestration. Said enterprise demonstrated extraordinary methodology curation. The organization exhibited unparalleled systematic implementation…”

Every sentence restates the same idea using completely different vocabulary. It sounds like a thesaurus exploded. High temperature let the model grab unusual words; high penalties prevented it from ever settling into natural language patterns.

When this is useful: Almost never. Maybe exploratory poetry generation where you’ll heavily edit.

The Diminishing Returns Trap: Temperature Zeros Everything Else

At Temperature ≈ 0, the probability distribution becomes so sharp that one token has 95%+ probability. In this state:

- Top-K is irrelevant (you’re only ever picking the top token anyway)

- Top-P is irrelevant (the top token already exceeds any P threshold alone)

Example:

Settings: T=0.0, K=10, P=0.9, FreqPen=0.5

What’s actually active: Just T=0.0. Everything else is decoration.

The trap: You spend time tuning K, P, and penalties, seeing no effect, and conclude these parameters “don’t work.” They work fine—you’ve just zeroed them out with your temperature setting.

Diagnostic: If you’re at T=0.0-0.1 and other parameters seem inert, raise temperature to 0.5-0.7 and retest. You’ll suddenly see the other parameters take effect.

Proven Recipes: Tested Combinations for Common Use Cases

These configurations are battle-tested starting points. Adjust based on your specific model, prompt structure, and quality requirements.

Technical Writing

Goal: Accurate, consistent, professionally readable

| Parameter | Value | Rationale |
| --- | --- | --- |
| Temperature | 0.3 | Low enough for accuracy, high enough to avoid robotic prose |
| Top-P | 1.0 (default) | Let temperature do the creativity control |
| Top-K | off | Not needed with low temperature |
| Freq Penalty | 0.3 | Gentle pressure against jargon loops |
| Presence Penalty | 0.0 | Don’t force vocabulary diversity in technical content |

Before (T=0.8, no penalties): “The API enables powerful integration capabilities. The powerful integration layer powers your powerful data transformations. This powerful approach provides powerful results.”

After (T=0.3, FreqPen=0.3): “The API enables seamless integration with existing systems. The data transformation layer handles complex operations automatically. This approach ensures reliable, consistent results.”

Creative Fiction

Goal: Original, evocative, non-generic prose

| Parameter | Value | Rationale |
| --- | --- | --- |
| Temperature | 0.9 | High creativity for fresh phrasing |
| Top-P | 0.85 | Slight filtering to prevent linguistic nonsense |
| Top-K | off | Let Top-P handle dynamic filtering |
| Freq Penalty | 0.5 | Encourage vocabulary variety |
| Presence Penalty | 0.5 | Push toward fresh words |

Before (T=0.5, no penalties): “The rain fell on the city streets. People walked through the rain with umbrellas. The rain made the streets wet and shiny.”

After (T=0.9, both penalties at 0.5): “Rain cascaded down glass towers, turning the city into a vertical river. Pedestrians navigated the deluge beneath a canopy of umbrellas—black, red, forgotten. The streets gleamed like they’d finally shed their secrets.”

Conversational Chatbot

Goal: Natural, helpful, not repetitive over long conversations

| Parameter | Value | Rationale |
| --- | --- | --- |
| Temperature | 0.7 | Balanced for natural dialogue |
| Top-P | 0.9 | Standard filtering |
| Top-K | off | Not needed |
| Freq Penalty | 0.3 | Light variety |
| Presence Penalty | 0.4 | Slightly stronger push against repeated phrases |

Before (no penalties after many turns): “I’d be happy to help with that! I’d be happy to explain further. I’d be happy to answer any other questions!”

After (penalties applied): “I’d be happy to help with that! Let me explain further. Any other questions I can tackle?”
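To see why the repeated opener loses its grip, here is a minimal sketch of how the two penalties can adjust token scores before sampling. It uses the subtractive form described in OpenAI's API documentation; other providers may implement penalties differently. The function name `apply_penalties`, the token scores, and the history are hypothetical.

```python
from collections import Counter

def apply_penalties(logits, generated_tokens,
                    frequency_penalty=0.0, presence_penalty=0.0):
    """Return a copy of `logits` (token -> score) adjusted for repetition.

    Frequency penalty grows with each repeat of a token; presence penalty
    is a flat, one-time deduction for any token that has appeared at all.
    """
    counts = Counter(generated_tokens)
    adjusted = {}
    for token, score in logits.items():
        count = counts.get(token, 0)
        adjusted[token] = (
            score
            - count * frequency_penalty
            - (1.0 if count > 0 else 0.0) * presence_penalty
        )
    return adjusted

# Hypothetical scores for the next word, after "happy" was already used 3 times.
logits = {"happy": 5.0, "glad": 4.2, "pleased": 4.0}
history = ["happy", "happy", "happy"]

adjusted = apply_penalties(logits, history,
                           frequency_penalty=0.3, presence_penalty=0.4)
print(adjusted)
print("top choice:", max(adjusted, key=adjusted.get))
```

In this toy case the score for "happy" drops from 5.0 to 3.7 (three repeats at 0.3 each, plus 0.4 for presence), which is enough for "glad" to take first place.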

Data Analysis

Goal: Reproducible, deterministic, identical outputs for identical inputs

| Parameter | Value | Rationale |
| --- | --- | --- |
| Temperature | 0.0–0.2 | Near-deterministic |
| Top-P | 1.0 | No filtering |
| Top-K | off | No filtering |
| Freq Penalty | 0.0 | No variety pressure |
| Presence Penalty | 0.0 | No variety pressure |

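The point: You want output that is as close to identical as possible on every run, so all variety controls are disabled. This is essential for automated pipelines where downstream processes expect consistent formats. For the strictest repeatability, use the bottom of the range (T=0.0); even then, hosted models can show small run-to-run differences, so validate the format downstream rather than assuming byte-identical output.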
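One way to check whether a configuration is actually reproducible is to run the same prompt several times and compare the results. The sketch below assumes a hypothetical `generate(prompt)` callable that wraps whatever API you use; `fake_generate` is a stand-in so the example runs without network access.

```python
import hashlib

def is_reproducible(generate, prompt, runs=5):
    """Call generate(prompt) `runs` times; True if every output is identical."""
    digests = {
        hashlib.sha256(generate(prompt).encode("utf-8")).hexdigest()
        for _ in range(runs)
    }
    return len(digests) == 1

# Stand-in for a real API call made with the Data Analysis settings.
def fake_generate(prompt):
    return '{"rows": 3, "status": "ok"}'

print(is_reproducible(fake_generate, "Summarize the table as JSON."))
```

Swapping `fake_generate` for a real call lets you measure how stable your provider is at your chosen settings before you build a pipeline on top of it.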

Brainstorming

Goal: Maximum idea variety, novelty over coherence

| Parameter | Value | Rationale |
| --- | --- | --- |
| Temperature | 1.2 | High creativity |
| Top-P | off | Let temperature run |
| Top-K | 50 | Safety ceiling only |
| Freq Penalty | 0.8 | Aggressive variety |
| Presence Penalty | 0.6 | Push hard for fresh concepts |

Before (T=0.7, low penalties): “Marketing ideas: 1. Social media campaign 2. Email marketing 3. Content marketing 4. Influencer partnerships”

After (T=1.2, aggressive penalties): “Marketing ideas: 1. Reverse testimonials (customers explain what life was like before) 2. Competitive vulnerability reports (publish your weaknesses) 3. Micro-sponsorships of niche Discord servers 4. Customer-written product roadmaps”

The high settings produce unusual ideas. Some will be brilliant; some will be unusable. That’s the brainstorming trade-off.

Marketing Copy

Goal: Creative but coherent, fresh but professional

| Parameter | Value | Rationale |
| --- | --- | --- |
| Temperature | 0.8 | Strong creativity |
| Top-P | 0.9 | Standard filtering |
| Top-K | off | Not needed |
| Freq Penalty | 0.7 | Strong anti-repetition |
| Presence Penalty | 0.4 | Moderate variety |

Before (low penalties): “Our innovative product delivers innovative results. The innovative technology behind our innovative approach makes innovation accessible.”

After (marketing recipe): “Our product delivers results that surprise even us. The technology behind it transforms complexity into opportunity. Finally, innovation you can actually use.”
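If you move from a model garden to code, the six recipes fit naturally into a lookup table. This is a sketch under assumptions: the key names follow a common API naming style, but providers differ in which parameters they accept (some do not expose `top_k` at all), so check your provider's reference. "Off" is represented as `top_p=1.0` or by omitting `top_k`, the Data Analysis entry uses the low end of its 0.0–0.2 range, and `build_params` is a hypothetical helper.

```python
# The six recipes as plain dictionaries. Key names are assumptions.
RECIPES = {
    "technical_writing": {"temperature": 0.3, "top_p": 1.0,
                          "frequency_penalty": 0.3, "presence_penalty": 0.0},
    "creative_fiction": {"temperature": 0.9, "top_p": 0.85,
                         "frequency_penalty": 0.5, "presence_penalty": 0.5},
    "chatbot": {"temperature": 0.7, "top_p": 0.9,
                "frequency_penalty": 0.3, "presence_penalty": 0.4},
    "data_analysis": {"temperature": 0.0, "top_p": 1.0,
                      "frequency_penalty": 0.0, "presence_penalty": 0.0},
    "brainstorming": {"temperature": 1.2, "top_p": 1.0, "top_k": 50,
                      "frequency_penalty": 0.8, "presence_penalty": 0.6},
    "marketing_copy": {"temperature": 0.8, "top_p": 0.9,
                       "frequency_penalty": 0.7, "presence_penalty": 0.4},
}

def build_params(recipe_name, max_tokens=1000, **overrides):
    """Copy a recipe, add max_tokens, and apply any one-off overrides."""
    params = dict(RECIPES[recipe_name])
    params["max_tokens"] = max_tokens
    params.update(overrides)
    return params

print(build_params("chatbot"))
print(build_params("creative_fiction", temperature=1.0))
```

Keeping recipes as data rather than scattered literals makes it easy to change one value at a time and to record which configuration produced which output.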

The Golden Rules

Rule 1: One Parameter at a Time

Adjust one parameter. Observe the output change. Then layer another. Multi-parameter changes make debugging impossible. When something goes wrong (and it will), you need to know which dial broke it.

Rule 2: Start With the Baseline

Begin here: Temperature=0.7 | Top-P=0.9 | FreqPen=0.3 | PresPen=0.3

This is a sensible center point. Run your prompt. Evaluate output. Move one dial. Run again. Map what each variable actually does to your specific use case before building complex configurations.
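The "move one dial" discipline can be made mechanical. The sketch below generates test configurations that each differ from the baseline in exactly one value; the candidate values are arbitrary examples, and the parameter key names are assumptions that may not match your provider.

```python
BASELINE = {"temperature": 0.7, "top_p": 0.9,
            "frequency_penalty": 0.3, "presence_penalty": 0.3}

def one_at_a_time(baseline, candidates):
    """Yield (parameter_name, settings) pairs that change exactly one value."""
    for name, values in candidates.items():
        for value in values:
            if value == baseline.get(name):
                continue  # identical to the baseline, nothing to learn
            variant = dict(baseline)
            variant[name] = value
            yield name, variant

# Example values to explore; pick your own.
candidates = {"temperature": [0.3, 0.7, 1.0],
              "frequency_penalty": [0.0, 0.6]}

for name, settings in one_at_a_time(BASELINE, candidates):
    print(f"changed {name}: {settings}")
```

Run your prompt once per variant and compare each result against the baseline output, so any difference can be attributed to a single parameter.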

Rule 3: Watch for Override

When Top-K and Top-P are both set, the tighter of the two usually decides the candidate pool at any given step, and the other does nothing. Test with each individually to determine which is actually active. Don’t waste tuning time on inert parameters.
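A quick way to reason about override is to compare the size of the Top-P nucleus with K for a given distribution. The sketch below is a simplification: it measures the nucleus on the unfiltered distribution, whereas some implementations renormalize after Top-K before applying Top-P. The two probability lists are invented examples.

```python
def nucleus_size(probs, top_p):
    """Smallest number of top-ranked tokens whose cumulative mass reaches top_p."""
    cumulative = 0.0
    for size, p in enumerate(sorted(probs, reverse=True), start=1):
        cumulative += p
        if cumulative >= top_p:
            return size
    return len(probs)

def binding_filter(probs, top_k, top_p):
    """Report which filter leaves fewer candidates for this distribution."""
    size = nucleus_size(probs, top_p)
    if top_k < size:
        return "top_k"
    if size < top_k:
        return "top_p"
    return "tie"

confident = [0.92, 0.04, 0.02, 0.01, 0.01]  # model is sure of the next token
uncertain = [0.22, 0.20, 0.20, 0.19, 0.19]  # many plausible next tokens

print(binding_filter(confident, top_k=3, top_p=0.9))
print(binding_filter(uncertain, top_k=3, top_p=0.9))
```

For the confident distribution Top-P is the tighter filter, and for the uncertain one Top-K is. The active parameter can change from token to token, which is why testing each setting on its own is more reliable than guessing.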

Rule 4: Diagnose the Diminishing Returns Trap

If you’re at Temperature 0.0-0.2 and other parameters seem to have no effect, that’s not a bug; it’s the pipeline. Low temperature concentrates probability so intensely that filtering has nothing to work with and penalties rarely change which token wins. Raise temperature to restore their function.

Rule 5: Match Penalties to Temperature

Low temperature + high penalties = conflict. High temperature + high penalties = chaos. Find your lane:

- Precision work: Low temp (0.0-0.3), zero or minimal penalties

- Professional work: Medium temp (0.5-0.8), moderate penalties

- Creative work: High temp (0.9+), moderate to high penalties with Top-K as a ceiling
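The lanes above can be turned into a simple pre-flight check. This is a rough sketch: the numeric cutoffs are assumptions chosen so that the recipes in this guide pass cleanly, not established limits, and `check_settings` is a hypothetical helper.

```python
def check_settings(temperature, frequency_penalty=0.0,
                   presence_penalty=0.0, top_k=None):
    """Return warnings for temperature/penalty combinations likely to misbehave."""
    warnings = []
    strongest = max(frequency_penalty, presence_penalty)
    # Precision lane: low temperature should carry zero or minimal penalties.
    if temperature <= 0.3 and strongest > 0.3:
        warnings.append("low temperature with high penalties: likely conflict")
    # Creative lane: strong penalties at high temperature want a Top-K ceiling.
    if temperature >= 0.9 and strongest >= 0.6 and top_k is None:
        warnings.append("high temperature, high penalties, no Top-K ceiling: risk of chaos")
    return warnings

print(check_settings(0.1, frequency_penalty=0.8))
print(check_settings(1.2, frequency_penalty=0.8, presence_penalty=0.6, top_k=50))
```

The first call returns a conflict warning; the second matches the Brainstorming recipe and returns an empty list.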

From Consumer to Architect

The commercial web UIs were never going to tell you any of this. They wanted you to click “Creative” and move on. That’s fine for casual use, but it’s not where professional work gets done.

The gap between “pretty good AI output” and “exactly what I need, every time” lives in these parameters. Temperature isn’t just a creativity slider—it’s a probability distribution transform. Top-P isn’t just vocabulary control—it’s dynamic, confidence-adaptive filtering. Penalties aren’t just repetition fixes—they’re cumulative forces that can push output into brilliance or awkwardness depending on your other settings.

Now you know what’s behind the curtain. You understand why outputs feel different between web UI and API. You can diagnose why your chatbot started repeating itself, why your technical documentation sounds robotic, why your brainstorming sessions produce the same five ideas every time.

Model gardens give you the control surface without the code. APIs give you programmatic access at scale. Either way, you’re no longer guessing.

One parameter at a time. Observe. Adjust. Master.

Quick Start: Temp=0.7 | MaxTokens=1000 | TopP=0.9 | RepPenalty=1.1 — then adjust ONE thing at a time.

Note on images and video

Image and video generation models use different control systems than text models. Some parameters overlap in concept (like “creativity” or randomness controls), but many text parameters, such as Top-K, Top-P, Frequency Penalty, Presence Penalty, and even Max Tokens, do not exist in the same form for visual models. Instead, image/video systems rely on parameters like guidance scale, diffusion steps, scheduler type, frame count, resolution, and other modality-specific controls. In other words, the sampling logic described in this guide applies to text generation, and visual generation has its own separate parameter set and behaviors.

Image Generation parameters (typical ranges; exact names and limits vary by model and tool):

- CFG Scale (1-30, default 7.5) — prompt adherence strength

- Steps (10-150, optimal 30-50) — denoising iterations

- Seed (-1 or specific number) — reproducibility control

- Sampler (Euler a, DPM++, DDIM) — algorithm selection

Video Generation parameters (typical ranges; exact names and limits vary by model and tool):

- Motion (0-255) — movement intensity

- FPS (8-60) — frame rate

- Duration (2-16 seconds) — clip length

- Init Strength (0-1) — source influence

None of these maps directly to the text sampling parameters (Temperature, Top-P, Top-K, penalties). They are different control systems for different generation processes: diffusion-based synthesis versus autoregressive token prediction.

One conceptual overlap is worth noting. CFG Scale (Classifier-Free Guidance) controls how strictly the model follows the prompt versus generating freely, which is loosely analogous to temperature’s creativity/determinism tradeoff. The mechanisms differ, though: temperature reshapes the token probability distribution, while CFG Scale adjusts the balance between conditioned and unconditioned noise predictions in diffusion.
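For readers who prefer notation, the two mechanisms can be written side by side in their standard textbook forms. The symbols are not from this guide and are introduced here only for illustration: $z_i$ is the logit for token $i$, $T$ is the temperature, $\epsilon_\theta$ is the diffusion model's noise prediction, $x_t$ is the noisy image at step $t$, $c$ is the prompt conditioning, $\varnothing$ is the empty (unconditioned) prompt, and $s$ is the CFG scale.

```latex
% Temperature: rescales logits before the softmax over tokens
p_i = \frac{\exp(z_i / T)}{\sum_j \exp(z_j / T)}

% Classifier-free guidance: extrapolates from the unconditioned
% noise prediction toward the prompt-conditioned one
\hat{\epsilon} = \epsilon_\theta(x_t, \varnothing)
  + s \left[ \epsilon_\theta(x_t, c) - \epsilon_\theta(x_t, \varnothing) \right]
```

Lowering $T$ sharpens a probability distribution over discrete tokens, while raising $s$ pushes a continuous noise estimate further toward the prompt. Both trade freedom for adherence, but they act on different objects.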

If you take nothing else from this guide, take this: you don’t need another “prompt hack,” you need to own the dials. Once you understand what Temperature, Top-P, Top-K, penalties, and Max Tokens actually do, you stop treating AI like a mysterious oracle and start using it like a precision instrument. Start from the baseline recipe, change one parameter at a time, and watch how your outputs shift from generic to tailored, from “pretty good” to “this is exactly what we needed.” The models are already powerful; the difference between being a consumer and an architect is whether you’re willing to step into the control room and actually use the panel.