Do those old H1 tags still work? Word’s out: they don’t really count for much in SEO anymore. When search engines rank pages algorithmically for keywords, does the quantity, power or diversity of inbound links take the hill? Correlation data indicates that link diversity is a factor that has become more important.

SEO Ranking Factors in 2009 at SMX Advanced 2009 was a classic shredding session that grew out of a session pitch about “attainable SEO”. The esteemed panel consisted of Rand Fishkin (SEOmoz), Laura Lippay (Yahoo), and Marty Weintraub (AIMCLEAR). Danny Sullivan, Executive Editor of Search Engine Land, moderated. Read on for a summary of which state-of-the-art SEO ranking factors still work… and which page, site and keyword attributes are dead by the side of the road.

Danny explained that this session “was a challenge”: people ask “can you just tell me what the formula is?”, which is a lot to convey in its entirety in a single session. Danny noted that some basic ranking factors that were important in 1996 still seem relevant today.

Speaking first was Rand Fishkin of SEOmoz. Rand said that Danny had asked him to present data that he finds exciting but that is still a little early. For their forthcoming 2009 SEO ranking factors report, they sent a survey to 100 SEOs around the world. Rand presented data from the 70 responses they received; the full data will be published in the next few weeks.

Expert opinion on keyword usage –

- Expert opinions were similar to those of years past. Most folks say keyword use in the title tag is highly important. Keyword use in the root domain is perceived as far more important than keyword use in the subdomain. There is majority consensus on the meta keywords tag, with 51 of 70 respondents saying it is not used at all. There is slightly less agreement on the meta description.

Correlation data on keyword usage –

- Rand shows off a spiffy yellow-orangeish pie chart. An interesting finding is that H1, H2, H3, etc. tags are just a sliver of the important on-page features. Alt text has quite a high correlation with successful ranking. Correlation is not causation! Rand doesn’t want to suggest that someone ranks well because they have great alt text.

- Rand also looked at the importance of keyword usage in various parts of the URL. An interesting finding was that Microsoft seems very interested in keyword usage across the entire URL path.

- Regarding keyword position within the title tag, the data suggests a high correlation between ranking well and having the keyword you are targeting as the very first word(s) of your title tag.

- The correlation data substantively disagrees with expert opinion in a number of places. H1 tags had a low correlation with rankings; Rand says you should definitely still optimize them, just don’t bank on them. Alt text showed a higher correlation with rankings, indicating there is good reason to spend time on it. Results also showed high correlation for keyword usage in the domain, subdomain, and path: anywhere you can get it.
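
Running a correlation study like this starts with recording, for each ranking page, where the target keyword appears. The sketch below is a hypothetical feature extractor (the function and field names are illustrative, not SEOmoz’s). It assumes the root domain is simply the last two host labels, which is wrong for suffixes like .co.uk, and it matches domains and paths with hyphens and punctuation stripped.

```python
from urllib.parse import urlsplit


def _squash(text: str) -> str:
    """Lowercase and drop non-alphanumerics so 'las-vegas-hotels' matches 'las vegas hotels'."""
    return "".join(ch for ch in text.lower() if ch.isalnum())


def keyword_features(keyword: str, title: str, url: str, alt_texts: list) -> dict:
    """Record where a target keyword appears on one ranking page (hypothetical feature set)."""
    kw = keyword.lower()
    t = title.lower()
    parts = urlsplit(url)
    labels = (parts.hostname or "").split(".")
    # Naive assumption: the last two host labels are the root domain.
    # A public-suffix list would be needed for hosts like example.co.uk.
    root_domain = ".".join(labels[-2:])
    subdomain = ".".join(labels[:-2])
    return {
        "title_contains": kw in t,
        "title_starts_with": t.startswith(kw),   # keyword as the very first word(s)
        "title_position": t.find(kw),            # character offset, -1 if absent
        "in_root_domain": _squash(keyword) in _squash(root_domain),
        "in_subdomain": _squash(keyword) in _squash(subdomain),
        "in_path": _squash(keyword) in _squash(parts.path),
        "in_alt_text": any(kw in alt.lower() for alt in alt_texts),
    }


features = keyword_features(
    "las vegas hotels",
    "Las Vegas Hotels | Deals",
    "https://www.lasvegashotels.com/las-vegas-hotels/",
    ["Las Vegas hotels at night"],
)
print(features)
```

Each page’s dictionary becomes one row of the spreadsheet that is later compared against rankings.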

Expert opinion on non-link factors –

- Non-link factors are signals other than links or keywords, such as those related to the domain, and how relevant they are. Most experts judged freshness of page creation to have a significant impact, and the existence of unique content on a page drew the largest consensus as having a very strong impact. Experts judged HTML validation to W3C standards to have a weak impact.

Rand went through that correlation data pretty fast; the search marketing world awaits the finished report.

Expert opinion on link metrics –

- Link popularity, of course, was perceived as highly significant.

Correlation data on link metrics –

- Google and Yahoo place great importance on external domain link trust, while Microsoft was more page-focused. When Rand initially looked for an absolute among metrics, he found only one metric with any ability to predict rankings: the number of different domains linking to a URL.
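
Counting different linking domains, as opposed to raw links, is simple once you have a backlink list. A minimal sketch, assuming backlinks arrive as hypothetical `(source_url, is_nofollow)` pairs and treating the last two host labels as the domain (so blog.example.com and www.example.com both count as example.com; this breaks on suffixes like .co.uk):

```python
from urllib.parse import urlsplit


def root_domain(url: str) -> str:
    # Naive assumption: the last two host labels identify the domain.
    host = urlsplit(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def linking_domain_count(backlinks, exclude_nofollow=False):
    """Count distinct domains among (source_url, is_nofollow) backlinks to one URL."""
    domains = set()
    for source_url, is_nofollow in backlinks:
        if exclude_nofollow and is_nofollow:
            continue
        domain = root_domain(source_url)
        if domain:
            domains.add(domain)
    return len(domains)


links = [
    ("http://www.example.com/a", False),
    ("http://example.com/b", False),
    ("http://blog.example.com/c", True),
    ("https://en.wikipedia.org/wiki/X", True),
    ("http://news.site.org/1", False),
]
print(len(links), linking_domain_count(links), linking_domain_count(links, exclude_nofollow=True))
```

Five links collapse to three linking domains here. The `exclude_nofollow` switch makes it easy to check the later Q&A question of whether nofollow links contribute to linking-domain diversity.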

Data takeaways on link metrics –

- PageRank may be important, but toolbar PageRank is a poor predictor. Domain Authority, TrustRank, and mozRank may be important, but these metrics still need some work.

Expert opinion on considerations of subdomains vs root domains –

- Overwhelmingly, SEOs agreed that content on subdomains inherits some, but not all, of the root domain’s link popularity features.

Correlation data on subdomains vs root domains –

- Rand says that data correlations were in step with SEO expert opinion.

Data takeaways on subdomains vs root domains –

- Subdomains likely do not inherit all of the ranking benefits of the root domain, but neither are they completely separate entities.

Expert opinion on the high level view of Google’s algorithm –

- In order, experts say that trust/authority of the domain, link popularity of the specific page, anchor text of external links, and on-page keyword usage are the four most important signals. Newer signals like hosting data and social graph metrics were not perceived as important.

Data correlation on the high level view of Google’s algorithm –

- Rand sees that the overall percentage for link popularity is in step with SEOs’ top three choices. Interestingly, page content showed slightly less correlation with rankings than keywords in the URL.

Expert opinion on geographic ranking factors for country specific targeting –

- Experts mostly agreed that the ccTLD of the root domain had the most SEO impact.

Expert opinion on the future of ranking signals –

- Most experts agreed that links will decline in importance but still remain powerful as newer signals from usage data and other sources rise.

Rand closed with a warning about his Link Juice drink: consumption should be limited to 3 cans per day, and it is not intended for women who are pregnant or nursing.

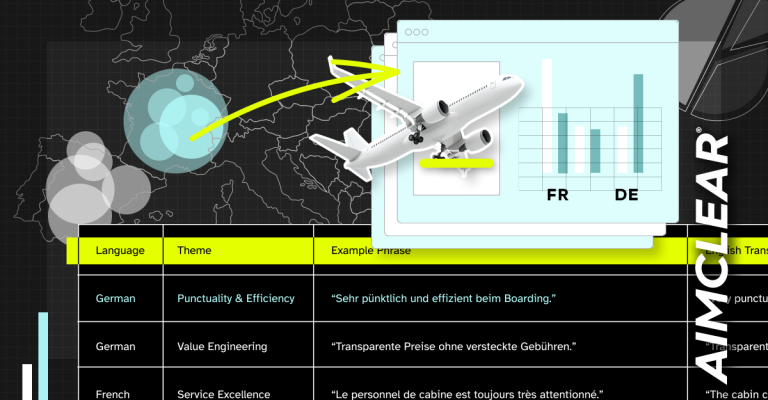

Speaking next was Marty Weintraub, President of AIMCLEAR. Marty’s presentation was entitled “Attainable SEO: Page Strength vs SERPs Difficulty.”

Marty says that good SEOs cared about attainability back in 1996. Those were the good old days: achieving high rankings was so easy it was like stealing. *sigh*

Before getting started, Marty said, understand that pulling spreadsheets is a far cry from programmatic automation. However, understanding page strength and SERP competitiveness is a powerful tool; you can use it to inform anything on your site.

You need to ask the question: “What can we rank for on this page, dude?”

Marty says that the state of the art is still pretty crude. Public understanding of the semantic and power nodes of search is pretty weak, especially at the enterprise level, but with cool APIs it won’t be crude for long.

Sad facts of SEO life: you can’t slay a dragon with a slingshot. That is, you can’t rank for “Las Vegas Hotels” with your new blogspot domain. Mediocre SEOs make the mistake of choosing keywords based solely on search frequency and then wonder why their site is sucking in the SERPs.

The internet is sooooo competitive that you need to understand attainability as a critical SEO ranking factor. Still, being attainable doesn’t matter if nobody cares.

Attainability & search frequency should be used in tandem; attainability doesn’t really mean anything without frequency.
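
One way to use attainability and frequency in tandem is to drop keywords a page can’t realistically win, drop keywords nobody searches for, and rank what’s left by demand. This is a hypothetical sketch, not Marty’s method: the `Keyword` fields, the 0-100 difficulty and page-strength scales, and the simple “difficulty <= strength” rule are illustrative assumptions, and the sample numbers are made up.

```python
from dataclasses import dataclass


@dataclass
class Keyword:
    phrase: str
    monthly_searches: int   # hypothetical search-frequency estimate
    difficulty: float       # hypothetical 0-100 SERP competitiveness score


def attainable_targets(keywords, page_strength, min_searches=1):
    """Keep keywords the page can plausibly win, then order them by demand."""
    candidates = [
        k for k in keywords
        if k.difficulty <= page_strength          # attainability
        and k.monthly_searches >= min_searches    # attainable doesn't matter if nobody cares
    ]
    return sorted(candidates, key=lambda k: k.monthly_searches, reverse=True)


keywords = [
    Keyword("las vegas hotels", 500_000, 95),            # the dragon
    Keyword("cheap las vegas hotels off strip", 1_200, 35),
    Keyword("las vegas hotels with kitchenettes", 900, 30),
    Keyword("vintage slot machine hotel lobby", 0, 5),   # easy, but nobody searches
]
targets = attainable_targets(keywords, page_strength=40)
for k in targets:
    print(k.phrase, k.monthly_searches)
```

The high-volume head term is filtered out as unattainable and the zero-volume phrase as pointless, leaving two middle-ground targets.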

Marty acknowledges that Webmaster Central is useful and cool, but it does not reveal the crucial SEO data we all crave. Search engines have a “don’t ask, don’t tell” policy; how many times can they tell us to make “relevant pages”? You would think that Google would let us buy better data.

Marty says the dawn of transparent next-gen tools is upon us. The metrics are coming that give us what we really want, independent of what the search engines feed us.

Marty likes SEOmoz’s Linkscape as the anti-black box for SEOs, revealing the metrics we’ve been asking for, with a volume of crawled data that’s quickly catching up to Yahoo’s.

Marty also likes MajesticSEO, another amazing tool that gives away remarkable data. It has alternate trust scores spawned from seed sets and incorporates metrics like traffic, engagement, and even social citations.

Marty says that all SEOs need to be correlating ranking factors against what’s happening in the SERPs; real SEOs test the crap out of the SERPs.

Build a spreadsheet of metrics that you hypothesize correlate with how pages rank in Google’s SERPs:

1. It’s about easy semantic evaluation from many sources, not from Google Webmaster Central!

2. You can use Linkscape’s internal/external page and site scrapes to determine how much page and domain trust there is. Why can’t we see all these things in Webmaster Central?

3. Use lots of other unique data sources: MajesticSEO, the Wayback Machine, Whois, Alexa, Compete, etc.

Choose your own grid of attributes and compare every attribute you find against the SERPs. Expect Marty to publish this entire process in specific detail on the AIMCLEAR Blog in the near future.
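
For the spreadsheet-versus-SERPs comparison, a rank correlation is a natural fit because SERP positions are ordinal. The sketch below computes Spearman’s rho (the Pearson correlation of ranks, with tied values given their average rank) between SERP position and one hypothesized metric. The data is invented for illustration, and nothing here implies this is the exact statistic SEOmoz or AIMCLEAR used.

```python
def average_ranks(values):
    """Rank values 1..n in ascending order; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined when one column is constant")
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two columns' ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data for one query: SERP position vs. a metric you suspect matters.
positions = [1, 2, 3, 4, 5]
linking_domains = [120, 85, 90, 40, 12]
rho = spearman(positions, linking_domains)
print(f"rho = {rho:.2f}")  # prints "rho = -0.90"
```

A negative rho here means more linking domains go with better (lower-numbered) positions. One SERP is a tiny sample, so you would likely repeat this across many queries before drawing conclusions, and, as Rand stressed, correlation is not causation.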

Up next was Laura Lippay, technical marketing director for Yahoo!. Laura’s presentation was entitled “This Ain’t Your Mother’s SEO.” Laura told us not to be alarmed if she fell over while presenting, because she had food poisoning, though I would have liked a warning about which restaurant it came from.

Laura says that when she first came to Yahoo!, she brought in Rand’s original factors. She has since learned that SEO is about much more than code tweaks and traditional ranking factors. If it were really just about that, what’s to stop her mom from creating a thoroughly optimized motorcycle site that outranks Yahoo Autos?

It really is more than that; it’s being viral, having buzz, getting bookmarked naturally, being your own linkbait.

What is that top secret (but not really) ranking factor? Laura says simply, it’s a Good Product.

Laura presented a case study on a Yahoo property that they were doing an optimization overhaul on. This property was in a competitive vertical, but their optimization effort was a tight production. They ended up seeing a big dip in traffic after the relaunch. What happened?

She says that Rand’s SEO ranking factors and specifications were mostly followed, but no one ever asked the question “what will it take for us to outrank the top competitor, to create buzz?” It ultimately required a far bigger strategy. Their biggest competitor was (and still is) killing them, but at least they have the SEO basics built in.

For example, if Laura’s mom had to choose a knitting site to sign up for, which one would she pick?

Knitting World – which has yarn articles, knitting news, knitting stories

Knitting Life – which has all those same things, plus the ability to upload knitting videos/photos and online knitting communities that interest her

Now, if you were a search engine and you could see that her mom spends more time on Knitting Life, which would you rank higher?

The more competitors you have, the less you should rely on traditional SEO tactics and the more you should rely on creating a buzz-worthy product in order to rank well. To test the relationship between high rankings and hot products: does a good product = good SEO?

Yahoo! did consumer surveys to compare their products against competitors. They compared top mindshare vs top search ranking across several topics. For sports, the top 3 companies in surveyed consumer mindshare correlated directly with Yahoo’s top search rankings. For finance, they correlated, although in a different order; the same was observed when comparing top movie sites.

What does this all have to do with ranking factors again? SEO is not a band-aid for a run-of-the-mill product. Think about strategy and look at what your competitors are doing: how can you build something that will outrank them?

You can’t forget the big picture: the best ranking factor you can have is a hot product. Make the product manager and other big people your best friends (buy them beer). Ask yourself questions about strategy before working on SEO.

Q & A Session (note this section is largely paraphrased)

Question: I’ve observed Google giving new sites 1- to 3-day ranking boosts. How can we deal with this? How can anyone compete with this if you are not the New York Times?

Danny Sullivan: Google will pop particularly fresh pages into the top results. I don’t really think it’s a case of just new sites; rather, Google is displaying hot content that they think is relevant, separate from the regular search results.

Rand Fishkin: QDF (query deserves freshness) has a few obvious algorithmic components, like domain trust, particularly for news sites. If you get even a few early references, you can outrank stuff that normally kicks your butt in search results. You can see this in searches for, tragically, Air France: quite possibly you’ll see news results about the plane crash in the search results (Marty shows the audience a number 1 unpersonalized result for “Brazil Confirms Jet Crash – Yahoo News”). If you are trying to do that, have domain authority, get your feed into Google News, hire a blogger, and think about syndication. Syndication partnerships can get you good links fast.

Marty Weintraub: We filter out for immediacy in unpersonalized SERPs.

Q: Do SEO factors revolve around the trust of a domain, and does Marty work for SEOmoz?

Danny: Marty likes that tool because there is data in it that you don’t get elsewhere.

Marty: It’s the concept of tool strings by API, wiring up what you need to power your API. Just because I like it (Linkscape) doesn’t mean it’s not cool. The days of big enterprise are all over; we can all get used to having this stuff now.

Danny: Over the past 2-3 years there has been a concept that “certain domains are authoritative”: they can do no wrong in Google, as long as they’re focused on particular content. People inside these organizations don’t want to screw this up. Aaron Wall created waves a few months ago when he showed a strong correlation with top brands ranking in Google where they hadn’t before. Was this Google deciding “we need to reward the brands, turn the brand dial up”? I think they didn’t necessarily crank the brand dial up, but brands have general authority, so maybe they cranked the authority dial up.

Marty: Let me get this right: they aren’t necessarily favoring brands, just everything that a brand happens to do? Brands are brands.

Danny: Procter & Gamble might outrank Crest for toothpaste because they are more of an authority.

Rand: When you see a high correlation of brands ranking well, it’s not Google saying “turn up the brand dial”; it’s that Procter & Gamble got a listing on the Fortune 500 and has a stock ticker. I get worried when SEOs think “Google is out to get them.” Google is out to serve their users. What is it that brands do that I can do?

Laura Lippay: At a high level, for Yahoo brands, it didn’t really affect us; I didn’t see anything that affected us.

Marty: What signal does Yahoo look to decide who’s a big brand?

Q: If I register my domain name for a million years, do I rank better?

Rand: The experts seemed to think this has a very low correlation with rankings.

Marty: I think it does.

Q: Is there any evidence that Nofollow links, like those from Wikipedia or mainstream media, contribute to linking domain diversity? Does it matter at all?

Rand: Surprisingly, it does appear to: when you pull out Nofollow links, correlation drops a little bit. My initial suspicion is yes, that a Nofollow link from Wikipedia is still a good signal.

Laura: Well, if you pull out a link that’s Nofollow, you’re also losing traffic; there are so many things going on at the same time. It’s hard to say that it’s just one thing, there’s a lot more to it.

Rand: Even if you believe we’re right, do not go out and get a bunch of Nofollow links; there are clear signals of what is natural growth of Nofollow links and what is non-natural growth.

Danny: I think Google’s official line is “no, no, we still don’t follow it.” If you are clever, you can ask Matt Cutts about that during the official You & A session.

Q: Is there a positive role that microsites can play without being spammy?

Rand: I would not suggest that. Look at large networks like Condé Nast: dozens of companies linking in different ways.

Marty: Does it serve the users?

Rand: Do not optimize links in the footer; we see lots of Q&As about people getting penalties for this. The natural flow of the web is that a lot of related sites will naturally link to each other.

Laura: I always try to get people with related content to create modules, and we see results when we do that. We put a content module in Yahoo Sports for clothing we’re selling on Yahoo Rivals. I don’t really waste my time on footer stuff.

Q: Laura, how do you think Google might measure buzz?

Laura: If you think about it, the big metrics people look at: repeat visits, bookmarks.

Rand: I would say branded query data is huge. The benefit of getting a link from TechCrunch isn’t so much “get a link from TechCrunch”; it’s everything around that.

*Marty continues asking search algorithm questions of Laura even though she is part of Yahoo’s marketing team*

Laura: Really smart people have been working on these search engines for years; how could they not be thinking about the things we’ve thought of?

Rand: For something like 80% of all sites, Google has access to some kind of usage data, be it Analytics, Webmaster Central, etc.

Q: How do I do an analysis for a 15,000-page site?

Marty: You can totally do it at the enterprise level.

Rand: This is not new, lots of people use the Linkscape API.

Q: Is SEO dead? It sounds like everything is back to traditional marketing.

Danny: I’m gonna say that people were literally saying SEO is dead in 1996. There are still people who have to do the basics of SEO: page titles, making sure the site gets crawled. These are still rocket science to the vast majority of people out there.

Laura: I don’t think it’s dead, it’s ever changing.

Rand: How much is it really changing? I compared the 2005 and 2007 ranking factors reports, and man, the fundamentals look alike.

Marty: Really, your title tag is your ad headline, and the meta description is the three lines in your ad.